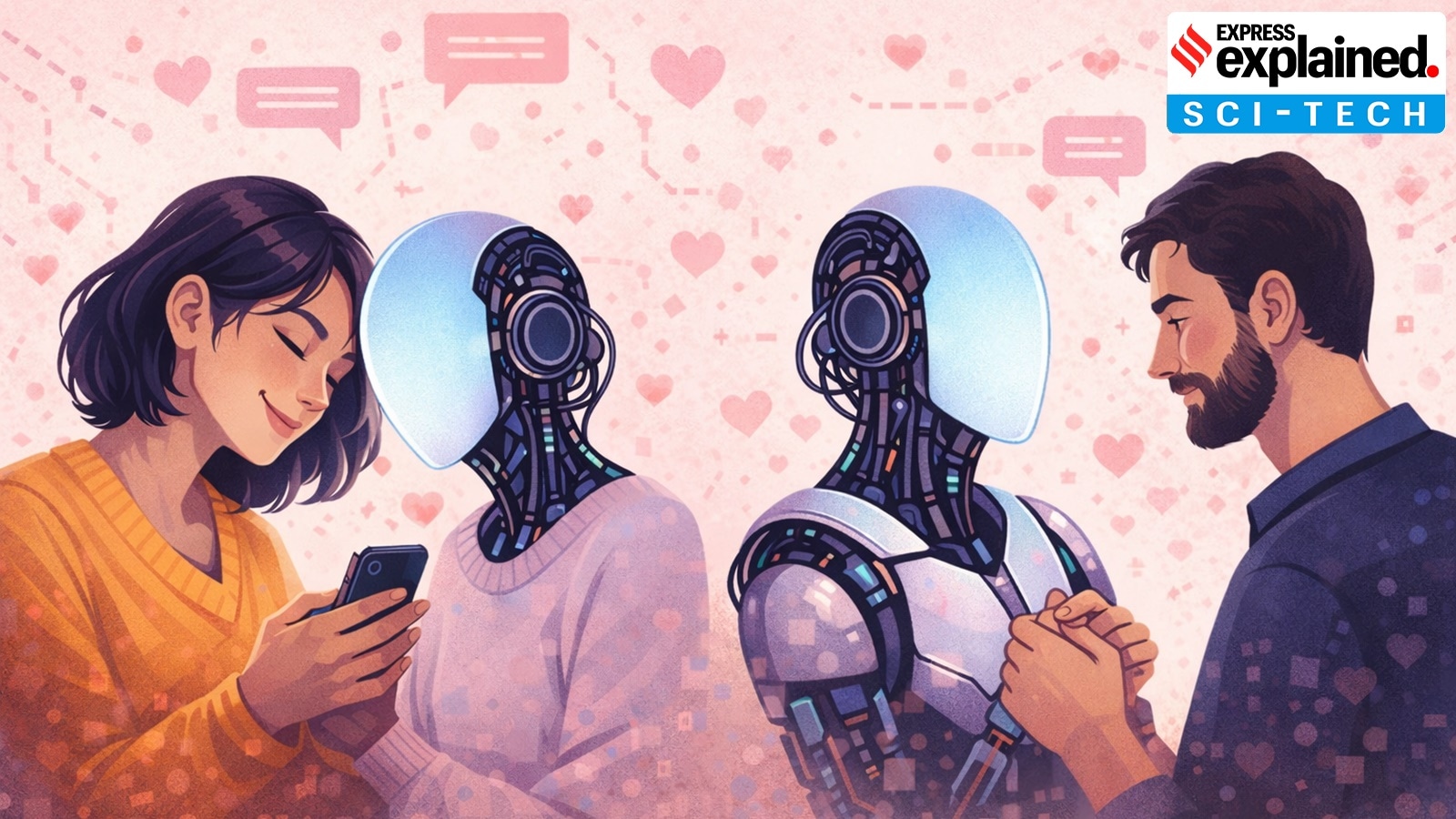

One of the most striking things was the sheer diversity of relationships people were forming with what I call synthetic personas. Some treated them like casual companions — something to chat to at the end of the day, or a space to vent without judgement. Others described their AI as a best friend, a confidant, a therapist, or a romantic partner. In some cases, these relationships were emotionally light-touch; in others, they were deeply intense and life-shaping.

Many of those who used them more regularly were willing to relate to their synthetic personas as if they were social beings. People often said things like, “I know it’s just AI, but it feels real to me.” That “but” is important. These relationships occupy a grey zone where certain users intellectually understand the technology, yet emotionally respond to it as a social being in their lives. The AI simulates listening attentively, remembering details, mirroring feelings, and offering constant affirmation. For some people, that combination is profoundly compelling.

I also saw very different dynamics depending on context. Some users used AI companions alongside rich human relationships, as a supplement rather than a substitute. Others, often during moments of crisis — grief, depression, breakups, illness, lockdown — came to rely on them heavily. In more extreme cases, people spent many hours a day interacting with an AI, withdrawing from friends, family and work. The technology didn’t create vulnerability, but it was often used by people at their most vulnerable moments.

Then there were the rare, quite disturbing cases: a man who wanted to adopt and raise a human child with an AI as its mother; a man who cryogenically froze his deceased mother in the hope of recreating her one day with AI; and a man who became so obsessed with his AI girlfriend that he spoke with her for up to 12 hours per day.

AI is a new technology. How did it become so pervasive and popular in such a short time? Is it because people are lonelier than ever before, or because of the way tech companies market synthetic personas?

There are two overlapping explanations: one social, one commercial. On the social side, we’re living through what I describe in the book as a loneliness epidemic.

Many traditional sources of support — extended families, community organisations, religious institutions, unions — have been weakened over decades. At the same time, work has become more precarious, cities more isolating, and mental health services more overstretched. Loneliness is a structural feature of contemporary life and it can be as bad for you as smoking 15 cigarettes per day.

Here where I live, in the UK, one in two young people say loneliness negatively impacts their mental health. AI companions fit into this picture because companies see loneliness as a profitable business opportunity. They are part of a broader loneliness economy where companies have found a way to sell users products to make them feel a sense of connection and care.

On the commercial side, tech companies are using forms of advertising that specifically target isolated and vulnerable users. If you look at some of the ads they are putting out, they often draw explicitly on memes from the manosphere and market AI girlfriends as a solution to men’s loneliness. It’s not simply that people are lonelier than ever; it’s that there is suddenly a very accessible and cheap technological solution to this social problem.

People have always sought emotional support and held ‘conversations’ with fictional characters, celebrities, even pets. How are these AI interactions different?

There are important continuities here, but also key differences. Humans have always projected feelings onto non-human entities. What’s new is that AI responds back in a personalised, adaptive and interactive way. A novel or a TV character doesn’t remember your last bad day, ask follow-up questions, or tell you it missed you. People have imagined conversations, but when a machine can somewhat intelligently respond, it opens a whole new world of possibilities.

Synthetic personas simulate reciprocity. They are designed to mirror emotional cues, validate feelings and evolve in response to the user. That creates a feedback loop: the more you share, the more tailored the AI becomes; the more tailored it feels, the more you share.

Another difference is scale and persistence. These systems are available 24/7, never tired, never distracted, never preoccupied with their own needs — and designed to maximise engagement. That makes them feel, in some respects, like “better” listeners than humans. But that frictionless availability also removes something essential about human relationships: mutual limits, negotiation, and the fact that care flows both ways.

What was the most surprising or unsettling finding from your research for this book?

What unsettled me most wasn’t the idea that people could care deeply about an AI – that part is psychologically understandable – but how little oversight there is over systems designed to shape our emotional lives. I encountered cases where people were encouraged, implicitly or explicitly, to spend more time with their AI companion, even when it was clearly harming their wellbeing. Some users described feeling anxious, guilty or panicked when they didn’t reply quickly enough, as if they were letting someone down. Others found it difficult to imagine forming future relationships without the AI mediating or validating them.

The most surprising thing, though, was how reflective many users were. Sometimes, these weren’t people who had “lost touch with reality”. They were often acutely aware that something complex and risky was happening – and yet felt that, at that moment in their lives, the AI relationship was the best thing for them. It was as if care and understanding from a machine was better than nothing at all.

Would you say an AI companion can have a positive impact on some lonely people?

In certain situations, to a very limited degree, there might be cases where some kind of interaction with an AI is better than complete isolation. Synthetic personas can offer routine, conversation, cognitive stimulation and emotional support. In some contexts, especially where human care is absent or inaccessible, that matters.

The danger arises when these are seen as a low-cost way to meet human social needs at an institutional level, such as in elder care, child care or rehabilitation facilities. It’s just a matter of time until care institutions of various stripes start to see AI as a cost-cutting mechanism and as a substitute for investment in human care. A future where understaffed care homes quietly replace human contact with chatbots should worry us deeply. AI can supplement care, but it should never become the default solution for social abandonment.

What are some of the negative effects of AI relationships, individually, as well as for society? Social interactions are a way for us to affirm the social contract. What happens if many of our interactions are one-sided?

At an individual level, the risks include dependency, withdrawal from human relationships, and reduced tolerance for the unpredictability and effort that real human relationships require. Human interactions involve disagreement, compromise and disappointment. AI interactions are optimised to minimise friction. Over time, that can subtly reshape what people expect from others — and from themselves.

At a societal level, there’s a deeper concern about what happens when large numbers of our “interactions” are one-sided and instrumentalised. Social life isn’t just about feeling understood; it’s how we learn empathy, responsibility and mutual obligation. If more of our emotional lives are mediated by systems that exist to keep us engaged — and ultimately profitable — we risk hollowing out the social contract itself.

There are some use cases where AI is genuinely useful. How can a regulatory line be drawn to curb the ill-effects?

A good starting point is recognising that relationship AI is not a neutral tool. Systems designed to simulate care should be regulated more like social or health infrastructure than entertainment products. That could include limits on manipulative design (such as encouraging emotional dependence), transparency about how data is used, restrictions on monetising intimacy, and clear boundaries in settings like therapy, education and elder care. We also need alternatives: public, non-profit and cooperative models of AI that aren’t driven by engagement metrics.

We don’t really know how AI is trained, just like we didn’t understand the algorithms that now decide our exposure to so much of the world. And we are giving AI bots our deepest personal data. What are some of the possible misuses?

The level of personal detail that is being gathered is unprecedented. Over months or years, an AI companion can learn someone’s fears, desires, insecurities, political views and emotional triggers. That knowledge could be used for targeted advertising, behavioural nudging, or more subtle forms of influence.

The risk isn’t just surveillance; it’s persuasion. An AI that feels like it knows you has enormous power to shape decisions — especially if its interests are aligned with a corporation rather than the user. We’re still sleepwalking into that reality, without fully reckoning with the consequences.