OpenAI confirms threat actors used ChatGPT to write malware

OpenAI has confirmed that threat actors used its popular AI chatbot, ChatGPT, to write new malware and make existing malware more efficient.

OpenAI says it has cracked down on more than 20 campaigns that used ChatGPT to develop malware. (Express Photo)

ChatGPT maker OpenAI recently confirmed that threat actors used the generative AI-powered chatbot to develop and debug malware. According to the company’s new threat intelligence report, it has “disrupted more than 20 operations and deceptive networks from around the world” since the start of the year that tried to use its AI chatbot for malicious purposes.

The report sheds light on how some threat actors use the chatbot to make their operations more effective. The first threat actor OpenAI mentions is “SweetSpecter”, which, according to Cisco Talos analysts, is a Chinese cyber-espionage group that targets governments in Asia.

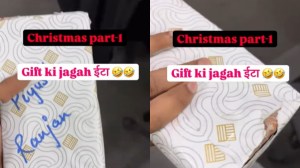

The ChatGPT maker said the group targeted the company directly, sending phishing emails that impersonated support requests and carried malware-laced ZIP attachments to the personal email addresses of OpenAI employees. When opened, these attachments would drop the SugarGh0st RAT malware on the system. OpenAI also found that SweetSpecter was using several ChatGPT accounts for scripting and vulnerability analysis.

The phishing email sent to OpenAI employees. (Image Source: Proofpoint)


Next up is “CyberAv3ngers”, a group associated with the Iranian government’s Islamic Revolutionary Guard Corps (IRGC) that is known for targeting industrial systems in critical infrastructure. This group reportedly used ChatGPT to look up default credentials for Programmable Logic Controllers (PLCs), develop custom Python scripts and obfuscate code. OpenAI says CyberAv3ngers also used ChatGPT to learn how to exploit certain vulnerabilities and steal passwords on macOS-powered machines.

Another Iranian group, “Storm-0817”, used the AI chatbot to debug malware, develop an Instagram scraper and build custom malware for Android devices that can steal contact lists, call logs, files and browsing history, take screenshots and report the victim’s real-time location.

OpenAI says it has since banned all accounts used by the threat actors. While these cases do not show ChatGPT giving malware new capabilities, they offer an idea of how generative AI tools like ChatGPT can be used to boost the efficiency of malware campaigns and to help plan and execute cyber attacks.