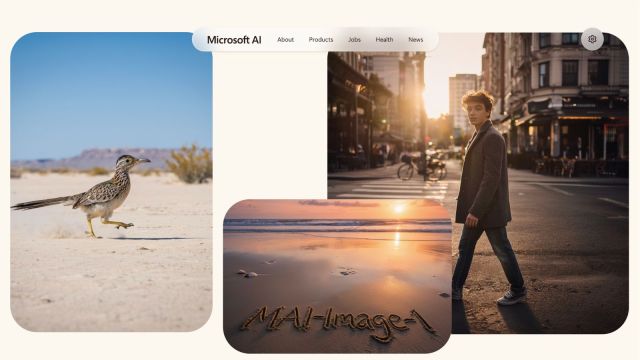

Microsoft unveils MAI-Image-1, its first fully in-house image model

MAI-Image-1 is Microsoft's third purpose-built model after MAI-Voice-1 and MAI-1-preview.

The model, according to Microsoft, excels at generating photorealistic imagery such as lighting, landscapes, and much more. (Express Image/Microsoft)

Microsoft on Monday, October 13, introduced its first text-to-image model developed entirely in-house. The model, named MAI-Image-1, is intended to power creative workflows across the company's products. The launch is a significant step in Microsoft's push to offer AI services that do not rely on OpenAI.

“Today, we’re announcing MAI-Image-1, our first image generation model developed entirely in-house, debuting in the top 10 text-to-image models on LMArena,” Microsoft said in its official post.

MAI-Image-1 is reportedly optimised to produce photorealistic images with fast generation. The model appeared among the top 10 on LMArena's text-to-image leaderboard right after its debut. In its official blog post, Microsoft said that it trained the model with the aim of delivering genuine value for creators. For the uninitiated, LMArena is a crowdsourced platform that evaluates and ranks AI models, including text-to-image models.

“We put a lot of care into avoiding repetitive or generically stylised outputs,” the company said. The tech giant added that it prioritised rigorous data selection and nuanced evaluation focused on tasks that closely reflect real-world creative use cases. All of this was done taking into account feedback from professionals in the creative industries. The model is designed to deliver flexibility, visual diversity, and practical value, according to the software giant.

The model, according to Microsoft, excels at generating photorealistic imagery, including lighting, landscapes, and much more. MAI-Image-1 will be available in Copilot and Bing Image Creator soon. It is the company's third purpose-built model after MAI-Voice-1 and MAI-1-preview. Together, these models show how Microsoft is working towards reducing its dependence on OpenAI.