Everything Adobe announced at Max 2025: Firefly Image 5, soundtrack generator, AI assistants

The creative software giant introduced the latest iteration of its Firefly image-generation model with new layered image-editing and prompt-based editing capabilities.

All the announcements from Adobe Max 2025. (Image: Adobe)

Adobe on Tuesday, October 28, unveiled a wave of new AI-powered tools and features that promise to reshape how artists, designers, and editors work. The announcements were made at the annual Adobe Max conference held in Los Angeles, California, United States.

The creative software giant introduced the latest version of its Firefly image-generation model with new capabilities such as layered image-editing and prompt-based editing. The web version of Adobe Firefly, an all-in-one platform for AI-assisted content ideation, creation, and production, has also been upgraded with new AI tools such as Generate Soundtrack and Generate Speech.

Its Creative Cloud apps such as Adobe Photoshop, Premiere, and Lightroom are also getting some new AI-driven features, along with agentic AI assistants in Adobe Express and Photoshop.

Adobe has sought to define its edge in the AI race by emphasising that its Firefly AI models are trained on commercially safe, licensed data, in contrast to applications developed by rivals like OpenAI, which is facing several copyright infringement lawsuits. In this way, Adobe is looking to walk a fine line between integrating generative AI across its product suite and ensuring that its community of designers is not thrown off by these newer experiences.

Here’s a look at the key announcements from Adobe Max 2025:

Firefly Image 5: At Max 2025, Adobe took the wraps off its new Firefly Image Model 5, which can generate photorealistic images at native resolutions of four megapixels and two megapixels. It comes with new layered image-editing and prompt-based editing capabilities. The layered image-editing feature decomposes user-uploaded assets into layers, which can then be moved around while shadows are re-projected and the image is re-harmonised accordingly.

New partner models: Adobe users already had access to models from third-party developers such as Black Forest Labs, Google, Luma AI, OpenAI, and Runway. These partner models have been accessible through its apps like Firefly, Photoshop, and Adobe Express. The company has now announced new partnerships with ElevenLabs and Topaz Labs, enabling user access to their AI voice generation and image upscaling models, respectively. Assets generated through partner models will also carry Content Credentials, the industry-standard metadata type developed by Adobe to distinguish AI-generated content, the company said.

Firefly custom models: Last year, Adobe launched an AI foundry service that allowed enterprises to build custom versions of Firefly models. Now, the company has expanded this offering to more creators in private beta.

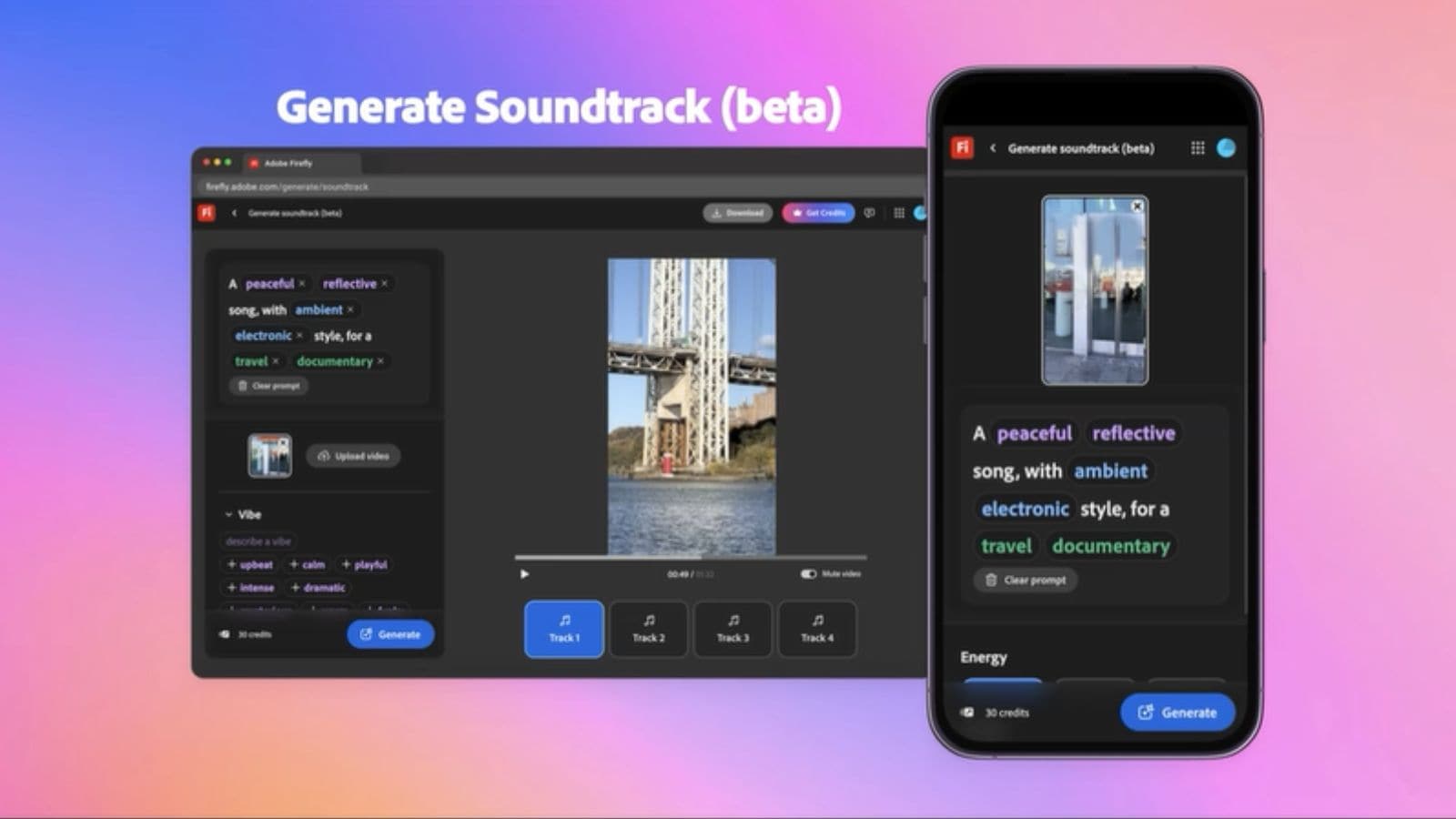

Generate Soundtrack: With this new feature, users can upload a video, and the AI tool will predict and generate a soundtrack to go with it. It is the first AI tool with the capability to generate instrumental audio, Adobe said. The feature is available in public beta via the Firefly website. It is trained on commercially safe content, so users do not have to worry about legal action, according to the company.

Adobe introduced a new AI feature called Generate Soundtrack at Max 2025. (Image: Adobe)

Generate Speech: This is another new AI-powered feature that can be used to generate and add voice-overs. Generate Speech has been released in public beta and can be accessed on the web version of Firefly. Adobe said that through its partnership with ElevenLabs, users have access to a large catalogue of voices. They can also fine-tune emotion and add emphasis to voice tracks.

Firefly video editor: It is a web-based multitrack timeline editor for generating, organising, trimming, and sequencing clips, with tools to add voiceovers, soundtracks, and titles. Creators can add all their Firefly creations in the Generation History panel, or generate new clips directly in the editor, with the ability to create start or end frames right from the timeline. They can also upload their own media to combine captured and generative content in one place. Firefly video editor has been launched in private beta on the Firefly platform.

Create for YouTube Shorts: Adobe has announced that it has teamed up with YouTube to introduce a new section in the Premiere mobile app, where creators can edit their videos using Adobe’s tools and publish them directly to YouTube Shorts with just one tap. These video-editing tools include exclusive effects, transitions, and templates that have been designed specifically for YouTube Shorts. Users can also visit the new section from the YouTube app by tapping on the “Edit in Adobe Premiere” icon in Shorts.

Prompt to Edit: It is a conversational editing interface that lets users describe the edits they want to make to an image in everyday language. Prompt to Edit is available within Firefly Image Model 5, and has been integrated with partner models from Black Forest Labs, Google, and OpenAI.

New AI assistant in Adobe Express: The AI assistant in Adobe Express allows customers to easily generate images, change backgrounds and text, and replace objects, without having to know which tool or model to use or which steps to take. The AI assistant can also interpret vague or subjective requests and generate new designs, Adobe said.

Project Moonlight: Adobe also previewed an AI assistant, codenamed Project Moonlight, that will have the ability to move across Adobe apps, learn from assets, and help creators ideate.