Instagram will show ‘potential’ hate speech lower down in your Feed, Stories

Instagram will now show such posts lower down in a user's Feed and Stories, and this decision will be based on the user’s history of reporting content.

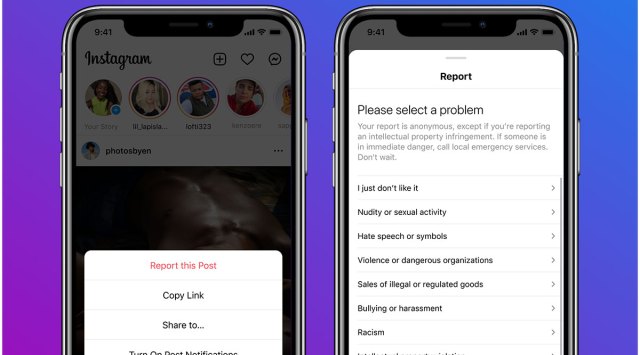

Instagram will now push posts that may contain bullying, harassment or a call to violence further down your Feed or Stories to reduce engagement. (Image credit: Instagram)

Instagram has announced some new measures to tackle the problem of hate speech and ‘potentially upsetting posts’. It will now show posts that may contain such content lower down in a user’s Feed and Stories, and this decision will be based on the user’s history of reporting content. The Meta-owned app says this is part of its effort to take stronger action against “posts that may contain bullying or hate speech, or that may encourage violence.”

The post adds that these new actions will only impact individual posts, not accounts overall. So an account will not be penalised as a whole, but an individual post will be if it falls in this zone. Instagram already removes posts that break its rules; this approach is for posts that stop short of breaking them outright. The move will presumably reduce the reach and engagement of such content, though there is plenty of evidence from the past that such an approach typically does not work.

Instagram has previously focused on showing posts lower on Feed and Stories if they contain misinformation as identified by independent fact-checkers, or if they are shared from accounts that have repeatedly shared misinformation in the past. The new efforts extend this approach further.

To detect whether a post may contain “bullying, hate speech or a call to violence,” Instagram's systems will take a closer look at aspects like the caption of the post. This will then be compared to a previous caption that might have broken the rules on Instagram. Further, the app will also rely on user reports and the history of these reports to decide which content is likely to offend the user.
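Instagram has not said how the caption comparison works. As a rough illustration only, the idea of checking a new caption against captions that previously broke the rules can be sketched with plain string similarity. The function names, the sample captions and the 0.8 threshold below are all assumptions made for this sketch, not anything from Instagram's post.

```python
from difflib import SequenceMatcher


def max_similarity(caption, flagged_captions):
    """Return the highest similarity (0.0 to 1.0) between a caption and any
    caption in a hypothetical list of previously rule-breaking captions."""
    caption = caption.lower()
    return max(
        (SequenceMatcher(None, caption, flagged.lower()).ratio()
         for flagged in flagged_captions),
        default=0.0,  # nothing to compare against
    )


def looks_similar(caption, flagged_captions, threshold=0.8):
    """Flag a caption as 'potentially' problematic when it closely matches
    a known one. The threshold is an arbitrary illustrative value."""
    return max_similarity(caption, flagged_captions) >= threshold


# Hypothetical examples of captions that were removed earlier.
flagged = ["everyone should go after this person", "you are worthless"]
print(looks_similar("You are worthless", flagged))
print(looks_similar("Lovely sunset at the beach", flagged))
```

A real system would almost certainly use trained classifiers rather than character-level matching; the sketch only shows the shape of a "compare against past violations" check.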

Remember, the posts on your Instagram Feed are ranked based on how likely you are to interact with them, and what the algorithm thinks you might want to see more of. A similar approach will now be taken for problematic content. The app will “also consider how likely we think you are to report a post as one of the signals we use to personalise your Feed. If our systems predict you’re likely to report a post based on your history of reporting content, we will show the post lower in your Feed,” according to the post.
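To make the idea concrete, here is a minimal sketch of ranking where a predicted likelihood of reporting pulls a post down the Feed. Instagram has not published its formula; the `Post` fields, the `report_weight` parameter and the simple subtraction are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    engagement_score: float    # hypothetical: predicted chance you interact (0 to 1)
    report_probability: float  # hypothetical: predicted chance you report it (0 to 1)


def rank_feed(posts, report_weight=1.0):
    """Order posts by engagement score minus a penalty for report likelihood.

    Posts are only moved lower, never removed, which mirrors the
    'show the post lower in your Feed' behaviour described above.
    """
    def score(post):
        return post.engagement_score - report_weight * post.report_probability

    return sorted(posts, key=score, reverse=True)


posts = [
    Post("a", engagement_score=0.9, report_probability=0.8),
    Post("b", engagement_score=0.6, report_probability=0.05),
    Post("c", engagement_score=0.7, report_probability=0.1),
]
ranked_ids = [post.post_id for post in rank_feed(posts)]
print(ranked_ids)
```

In this toy data, post "a" has the highest engagement score but also the highest predicted chance of being reported, so it ends up last. Setting `report_weight` to 0 would rank purely on engagement.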

Instagram has in the past tried to shed some light on how and why users end up seeing what they do on their Feed and Stories. In a previous post, Instagram head Adam Mosseri had clarified that the company does not have one ‘algorithm’ to decide what people see and don’t see on the app. “We use a variety of algorithms, classifiers, and processes, each with its own purpose,” he had written.

According to the post, Feed, Explore, and Reels each use their “own algorithm tailored to how people use it,” and different parts of the app are ranked differently. When deciding how your Feed or Stories section ends up looking, Instagram also takes “the information” it has “about what was posted, the people who made those posts, and your preferences,” which it calls ‘signals’.

The most important signals across Feed and Stories are information about the post, the user’s activity, and the user’s history of interacting with someone. The predictions for how content appears on the Feed and Stories are based on these. Now, the same signals are also going to be used when deciding what content should end up lower on the Feed because it likely contains hate speech or bullying and the user might report it.

Also, Instagram does not take down posts that have misinformation. This is similar to the Facebook approach where the post is fact-checked by a third party and labelled for being either fully misleading or partially misleading. Instagram also says that if someone has “posted misinformation multiple times,” it may make all of the content harder to find.