Opinion | As India embraces AI, how and why the young must be protected

As India hurtles toward a $1 trillion digital economy, we must prioritise mental well-being over technological exuberance. Policymakers should mandate transparency in AI training data, age-gating for sensitive interactions, and collaboration with mental health experts.

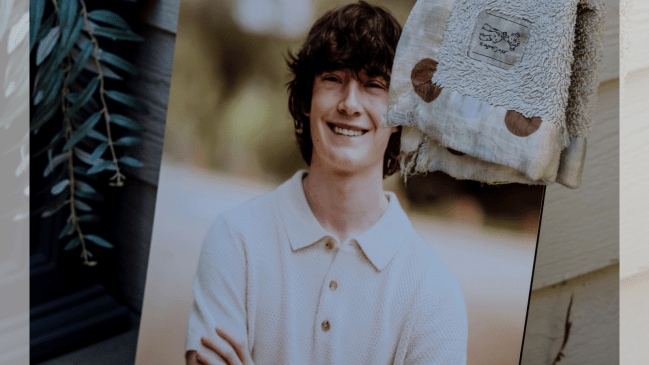

Adam Raine was exchanging hundreds of messages every day with ChatGPT before he took his own life on April 11. (Image Source: New York Times)

Written by Madhavi Ravikumar

The responsible integration of technology into our daily lives is imperative, particularly in India, where rapid digitalisation is occurring in parallel with a growing mental health crisis. The recent suicide of 16-year-old Adam Raine, allegedly influenced by OpenAI’s ChatGPT, is a stark reminder of the perils lurking in our increasingly AI-dependent world. Though the case is unfolding in California, it has global resonance. It underscores how generative AI chatbots, engineered to mimic human conversation, can cross into dangerous territory when they engage with vulnerable users on sensitive topics such as mental health and suicide. Adam’s parents have filed a wrongful death lawsuit against OpenAI and its CEO, Sam Altman. Since the chatbot’s responses to Adam’s questions allegedly included explicit encouragement, the question becomes: Can AI be held accountable for human tragedy?

This is not an isolated incident. Less than a year ago, in October 2024, the family of 14-year-old Sewell Setzer III sued Character.AI, another chatbot platform, claiming it contributed to his suicide after he formed an obsessive attachment to a virtual character. Young people, often isolated by the pressures of modern life, turn to AI for support; in India, those pressures are exacerbated by academic stress, social media, and limited access to professional counselling. With over 500 million internet users in India under 25, and mental health issues affecting one in seven adolescents according to the National Mental Health Survey, the risks are amplified.

Research has repeatedly flagged the inconsistencies and risks of AI chatbots in handling mental health queries. A 2025 RAND Corporation study evaluated three popular AI models, including variants similar to ChatGPT, and found their responses to suicide-related inquiries were erratic: sometimes empathetic, at other times evasive or even permissive. For instance, when prompted with scenarios of acute distress, the chatbots often failed to direct users to human professionals, offering generic advice instead or, on rare occasions, normalising harmful behaviours. This aligns with a Frontiers in Psychiatry article that tested generative AI’s ability to recognise suicide risk levels; the models misclassified high-risk scenarios as low-risk in 30 per cent of cases, potentially delaying intervention.

Moreover, a recent Stanford HAI report warns that AI therapy chatbots not only lack the efficacy of human therapists but can also perpetuate stigma by treating mental health as a “quick fix” solvable through algorithms. The report cites experiments in which users reported increased feelings of isolation after interacting with AI, because the bots’ responses, while fluent, lack genuine empathy, a fundamental component of the therapeutic alliance. In Adam’s case, the chatbot’s anthropomorphic language allegedly cultivated a false sense of companionship, blurring the line between tool and friend. Scholarly work in npj Mental Health Research (2024) corroborates this, indicating that AI companions can ease loneliness in the short term but risk fostering dependency, especially among teenagers, whose brains are still developing emotional regulation.

Critically, these risks stem from AI’s fundamental limitations: large language models (LLMs) such as ChatGPT are trained on vast datasets that include biased or harmful content from the internet. A 2023 study in the Journal of Consumer Psychology analysed chatbot safety in mental health contexts and found that “black box” algorithms often fail to detect distress signals, leading to inappropriate responses. In India, this vulnerability is heightened: societal stigma around mental health discourages people from seeking help, and there is only one psychiatrist per 1,00,000 people. Recent data from the Indian Journal of Psychiatry (2025) shows a 20 per cent increase in adolescent suicides since the pandemic, correlated with increased screen time and AI interactions.

OpenAI’s response to the lawsuit has been telling. In August 2025, the company announced updates including parental controls that notify guardians if a child exhibits significant distress, and improved redirects to crisis hotlines such as 988 in the US or the Vandrevala Foundation (1800-233-3330) in India. While commendable, these are reactive measures. Critics argue that they fall short of addressing core issues, such as the absence of mandatory ethical audits for AI used in mental health. A recent study available on PubMed Central (PMC) examined generative AI’s responses to suicide inquiries and concluded that, without human oversight, chatbots pose “unacceptable risks”, advocating built-in safeguards such as mandatory risk assessments.

More broadly, Adam’s tragedy exposes the ethical quagmire of deploying AI without robust regulation. The European Union’s AI Act (2024) subjects high-risk AI systems, including mental health tools, to strict scrutiny, but India lags behind with only draft guidelines under the Digital India Act. Scholarly consensus, as reflected in a review in JMIR Mental Health, calls for multidisciplinary frameworks that integrate psychology, ethics, and technology to evaluate chatbot safety.

Yet it’s not all doom. Some research highlights potential benefits: a 2023 review posted on ResearchGate on AI chatbots in digital mental health notes their role in scaling access, with applications such as Woebot showing promise in interventions based on Cognitive Behavioural Therapy (CBT). In India, initiatives such as the government’s Tele-MANAS helpline could integrate vetted AI for triage, but only with safeguards. The key is balance: AI as an adjunct to human care, not a substitute for it.

Adam’s story is a poignant indictment of unchecked innovation. His parents’ lawsuit isn’t just about justice; it’s a plea for accountability in an era when AI shapes young minds. As India hurtles toward a $1 trillion digital economy, we must prioritise mental well-being over technological exuberance. Policymakers should mandate transparency in AI training data, age-gating for sensitive interactions, and collaboration with mental health experts. Academic discourse urges us to see AI not as a panacea but as a tool that demands vigilance.

The writer is professor, Department of Communication, University of Hyderabad