‘Chatgpt is just humans’: Reddit user shares typo by GPT-4, sparks jokes

A Reddit user shared a screenshot of a conversation with GPT-4 showing the chatbot made a typographical error while answering a question.

Ever since OpenAI launched ChatGPT in November last year, the chatbot has caught the fancy of netizens. From writing essays to coding with the latest documentation, users have been amazed by what they can accomplish with it.

After GPT-4, an advanced version of ChatGPT, was launched in March this year, many people expected it to scale unimaginable heights. However, even GPT-4 isn’t immune to the occasional error.

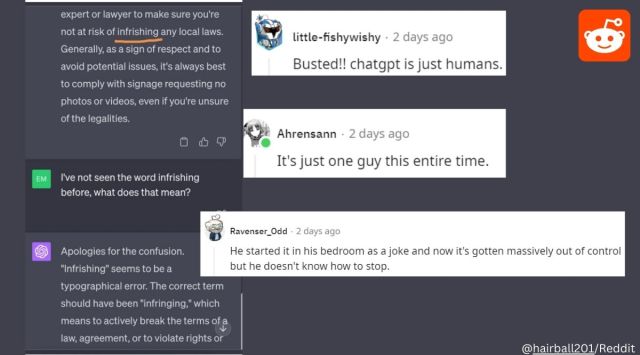

A Reddit user shared a screenshot of a conversation with GPT-4 showing that the chatbot had made a typographical error while answering a question. Answering a query on “pet shop recording concerns”, the chatbot misspelled the word “infringing”, writing “infrishing any local laws” in its answer.

When the user asked what the word “infrishing” meant, the chatbot corrected the mistake. “Apologies for the confusion. ‘Infrishing’ seems to be a typographical error. The correct term should have been ‘infringing,’ which means to actively break the terms of a law, agreement, or to violate rights…” it said.

The Reddit thread was full of jokes, with one user saying the chatbot “is just humans”.

“Biggest fraud in history,” wrote another. “When it says chatGPT has reached capacity it’s really just him taking a nap,” joked another one. “He started it in his bedroom as a joke and now it’s gotten massively out of control but he doesn’t know how to stop,” another person posted sarcastically. “Well typographical errors are patterns in natural languages. so pretty natural for it to pick up that,” another netizen said.