Apple patent shows mixed reality headset that can track facial expressions

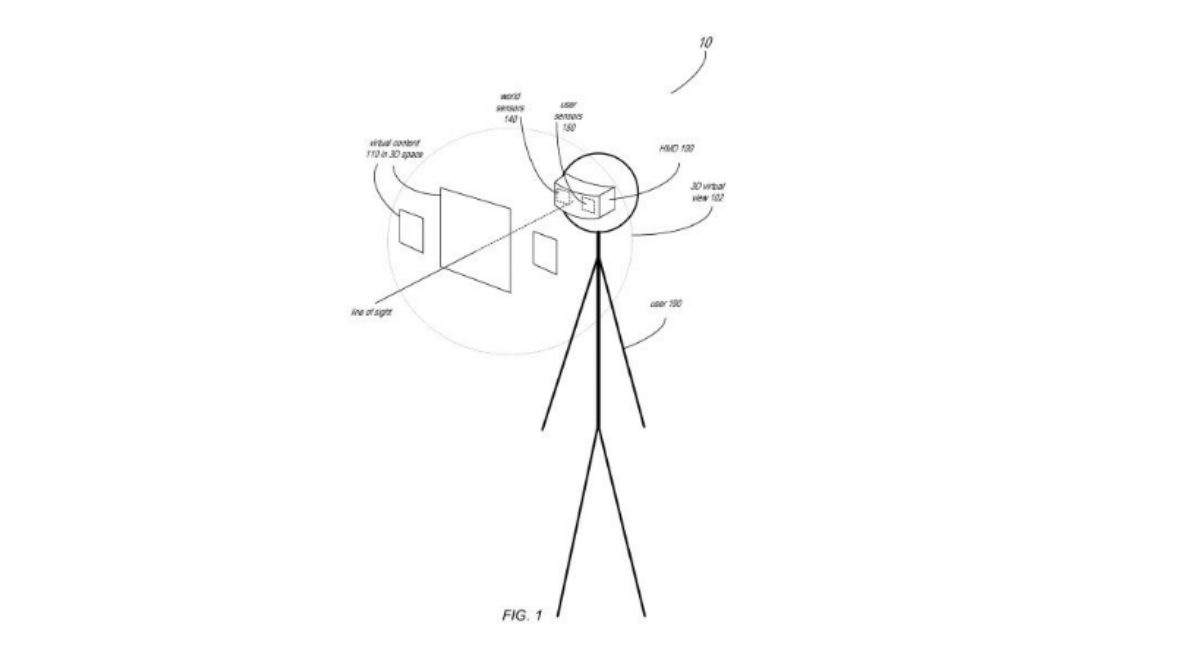

Apple has filed a patent for a mixed reality headset with sensors that can track a user’s eyes, depth information in the user’s environment, motion, and hand gestures, as well as facial expressions via eyebrow and lower-jaw sensors. The patent, titled “Display System Having Sensors,” was published last week, though it was filed in March this year, according to a Variety report.

The sensors will work together to allow the head-mounted display (HMD) to “detect interactions of the user with virtual content in the 3D virtual view.” Information from the sensors about the user’s environment, facial movements, hand gestures and so on acts as input to the controller, which in turn helps capture the user’s expressions more accurately for replication in virtual space and overlay 3D virtual images on the user’s environment.

“The method as recited in claim 18, further comprising: tracking, by the user-facing sensors, position, movement, and gestures of the user’s hands, expressions of the user’s eyebrows, and expressions of the user’s mouth and jaw; and rendering, by the controller, an avatar of the user for display in the 3D virtual view based at least in part on information collected by the plurality of user-facing sensors,” the patent reads.

A CNET report claims that Apple’s headset, which will support both augmented reality and virtual reality, is code-named T288 and is expected to launch sometime in 2020. It adds that the headset will feature an 8K display for each eye. Further, the headset won’t need a PC or a smartphone to work; instead, it will connect wirelessly to a PC-like box powered by a custom processor designed by Apple.