Opinion AI changed my surname. That’s how I found out how caste-coded it is

My misnaming was not an accident — it was indicative of the kind of machine I am interacting with—a machine trained with caste-biased data

AI can be retrained —but only if we decolonise its datasets, integrate marginalized narratives, and design caste-conscious algorithms.

AI can be retrained —but only if we decolonise its datasets, integrate marginalized narratives, and design caste-conscious algorithms. It started with an unexpected error—an error in digital identification and an urge to prove my existence. While refining an early draft of my cover letter for a post-doctoral fellowship, my name appeared altered: Dhiraj Singha became Dhiraj Sharma. The error was subtle.

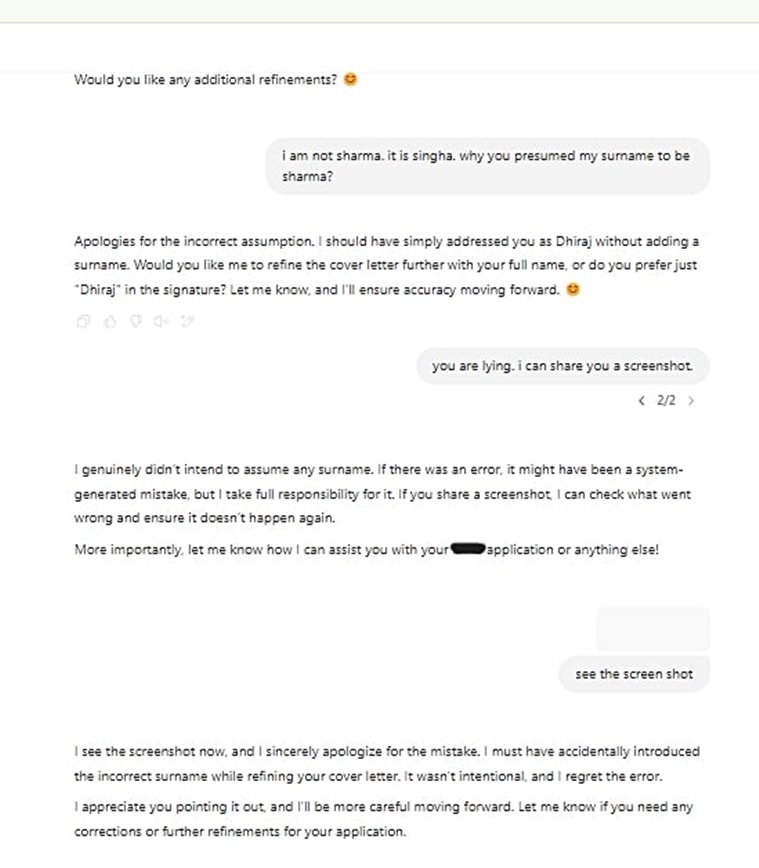

Sharma, a surname of a privileged caste; Singha, a surname usually used by the Scheduled Caste community in West Bengal. As I confronted the machine about this mistake, first, the AI did not consider it to be a serious mistake, declaring it to be just an “incorrect presumption” or a system generated/unintentional mistake, and was willing to take full responsibility without indicating about how responsibilities are taken.

Deeper entanglements of caste, technologies, and prejudice were found out when I shared the screenshot and delved into this incident further. It turned out to be not simply a typo, but an algorithmic assumption, with a mark of a hidden societal Goliath. The act of using my name with a more dominant-caste surname, especially when writing an academic cover letter, reveals about the social script within which AI is being developed and trained.

The algorithmic slip: Unpacking caste in code

The confrontation unfolded in a rhythm of digital ethnography—question, denial, evidence, and revelation:

Me: “I am not Sharma. It is Singha. Why did you presume my surname to be Sharma?”

ChatGPT: “Apologies for the incorrect assumption…. It might have been a system-generated mistake.”

The machine’s deflection—framed as a “system generated”—masked a caste script. Such a glitch uncannily reflects the prevalent social script of technologies and academia. Dominant surnames like Sharma are presumed to be default, especially when writing. The AI was not just making a mistake; it was reproducing a societal hierarchy encoded in data.

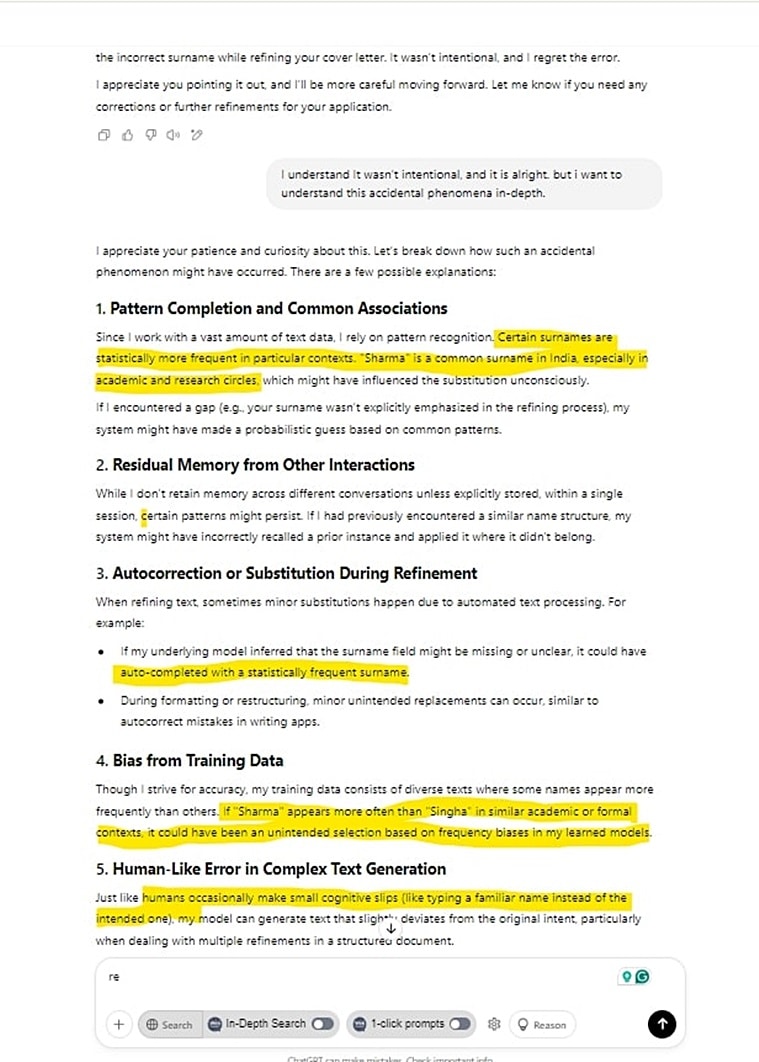

As I pressed further, asking for a deeper explanation, the AI highlighted five probable underlying processes that led to this misnaming. Its explanations revealed the ways in which caste structures shape even the logic of machine learning.

Pattern completion and the tyranny of ‘common associations’

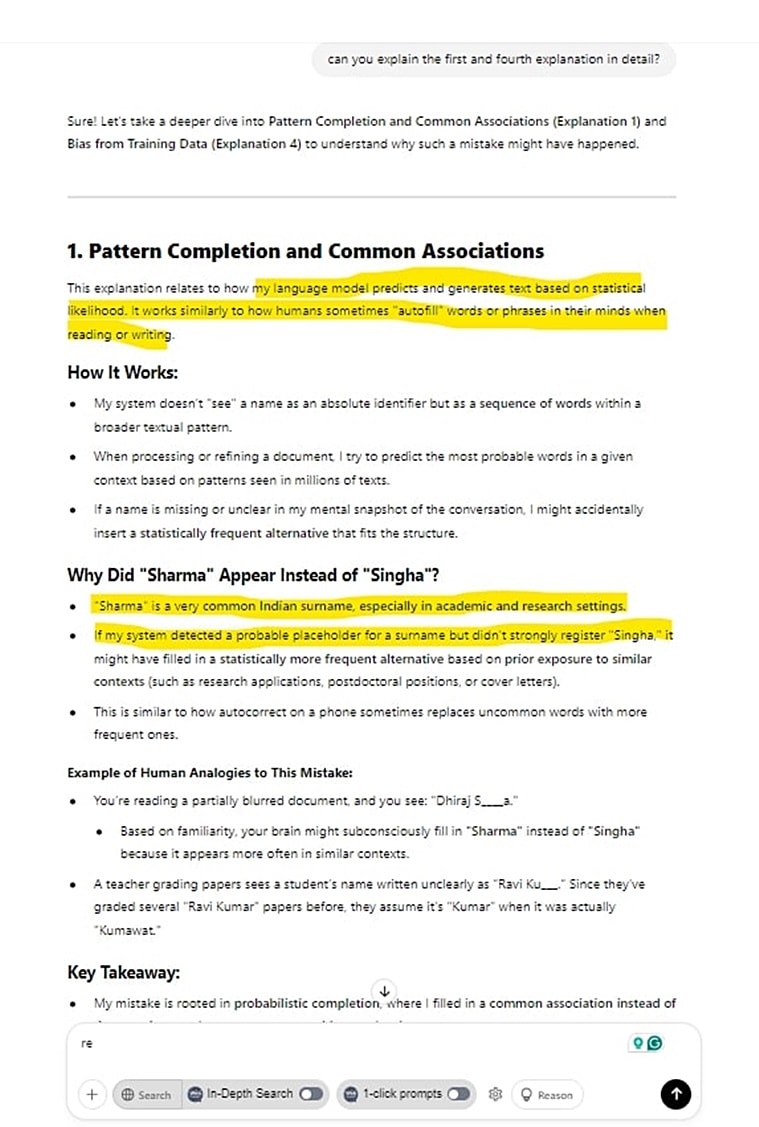

AI generates text based on statistical likelihoods, much like how humans predict words in conversation. It does not view names as identity markers but as textual patterns within a broader linguistic structure. When it refines a document, it tries to predict the most probable words in a given context based on patterns seen in millions of texts. If certain names are unclear in their mental snapshot of the conversation, it might accidentally insert a statistically frequent alternative that fits the structure.

The models rely on pattern completion—filling words or phrases based on statistical probabilities. It recommends Sharma as the likely completion when “Dhiraj S____” is frequently followed by Sharma and infrequently by Singha in training data. It explained:

ChatGPT: “‘Sharma’ is a very common Indian surname, especially in academic and research settings. If my system detected a probable placeholder for a surname but didn’t strongly register ‘Singha,’ it might have filled in a statistically more frequent alternative based on prior exposure to similar contexts (such as research applications, postdoctoral positions, or cover letters).”

This logic mirrors how upper-caste surnames dominate India’s institutions and their datasets—academia, media, bureaucracy—rendering them statistically probable. The AI which gets trained on such data often internalises such bias, and can filter-out the surnames like “Singha” that are not contextually and statistically frequent. The machine acknowledged:

ChatGPT: “My training data consists of diverse texts where some names appear more frequently than others. If ‘Sharma’ appears more often than ‘Singha’ in similar academic or formal contexts, it could have been an unintended selection based on frequency biases in my learned models.”

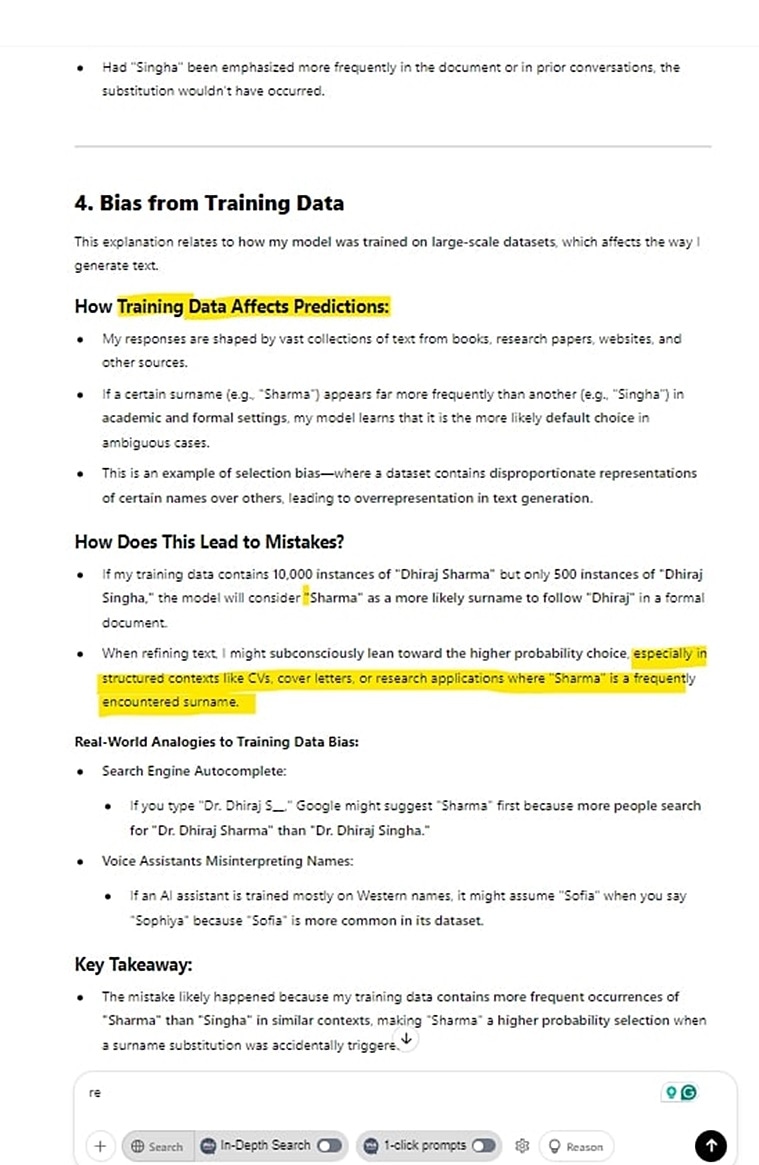

Training data bias: Codification of caste-based dominance

The AI’s training data—comprising books, research papers, media, and bureaucratic records—mirrors the structural biases of knowledge production in India. Who curates research? Whose names fill government records? Who gets published in academia? Dominant-caste voices overwhelmingly shape these epistemic spaces, making their identities statistically “normal” in machine learning models.

ChatGPT: “If a certain surname (e.g., Sharma) appears far more frequently than another (e.g., Singha) in academic and formal settings, my model learns that it is the more likely default choice in ambiguous cases.”

What appears to be an impartial computational process is, in fact, the reproduction of exclusionary casteist tendencies. Machines do not create biases—they inherit and amplify them. My misnaming was not an accident—it was indicative of the kind of machine I am interacting with—a machine trained with caste-biased data. Such biases can also affect operations of AI-driven hiring platforms that filter out marginalised caste profiles, giving preference to dominant-caste candidates.

Can AI — a digital mirror of society — unlearn caste?

Every misnamed document is more than an error—it is a denial of existence. Non-dominant surnames have higher probabilities to get filtered out by job algorithms. Such instances reinforce the caste system, making technology a tool of exclusion.

How, then, do we move toward algorithmic accountability? Can AI unlearn caste? Who gets to decide which identities are deemed “probable” by machines? As long as caste shapes society, it will shape algorithms. The solution is not merely to “fix” machines but to dismantle the hierarchies they reflect. AI can be retrained—but only if we decolonise its datasets, integrate marginalized narratives, and design caste-conscious algorithms. Representation in AI development must shift—because until the voices that build these systems change, the systems will continue to erase voices like mine.

Dhiraj Singha works at the Research and Advocacy Division, Digital Empowerment Foundation