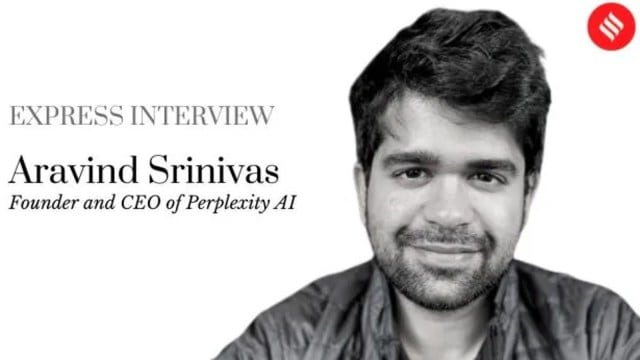

That’s Aravind Srinivas, founder and CEO of Perplexity AI, an IIT Madras graduate who is making waves in Silicon Valley, pitted in a David-versus-Goliath battle against the titans Microsoft and Google.

Speaking to The Indian Express from his home in San Francisco, Srinivas says he is positioning Perplexity as a “conversational answer engine” to differentiate it from the competition.


Srinivas, who previously worked at DeepMind, Google and Microsoft-backed OpenAI, says Perplexity’s USP is its attempt to reimagine the future of search by delivering clear, personalised answers that cut through the noise on the web that users of traditional search engines have to navigate.

Excerpts from an interview with The Indian Express:

How does Perplexity differentiate itself from competitors like OpenAI and Google Bard, and what do you think is the future of generative AI?

The fastest, most accurate and most user-friendly product. We have achieved that by crawling the web, pulling the relevant sources, using only the content from those sources to answer the question, and always telling the user where the answer came from through citations or references. We achieved the speed through a lot of work on product and backend engineering, by building our own custom inference infrastructure for large language models (LLMs) in partnership with Nvidia (an investor in Perplexity AI), and by crawling the web and building the search layer and infrastructure ourselves.

As for the future of generative AI, you have to think about which experiences are already possible and are going to become a lot more reliable. We already have good copywriting tools, question-answering tools, image generation tools and code generation tools, and all of these are going to become a lot more reliable… In terms of what is not possible today, there are some clear examples, video generation being one. We are not able to reliably generate a 10-second clip from a text description, but I think that will definitely change this year. (Days after the interview, OpenAI launched its Sora model, which can generate minute-long videos from text prompts.)


We also do not have agents: AI that doesn’t just answer questions for you but actually goes out and does tasks for you, such as filling out forms, doing your taxes, booking reservations and so on.

A number of search engines tried unsuccessfully to challenge Google’s dominance in internet search by essentially replicating the Google search model, and failed as a result. Do you think that with generative AI, where OpenAI has taken the lead, product differentiation is possible?

Google is a very interesting example, because its growth paid for itself. They figured out the AdWords business model, which enabled them to share revenue with a lot of people. But the current scenario is different because it actually disrupts the old system; you do not need to look at the links anymore, for instance. It is also very capital intensive, given the high cost of training and serving these models. You’re creating a model where people pay for the service, and subscriptions are generally a lower-margin, lower-growth business than advertising… That is where I think all the AI companies, including OpenAI and us, are yet to figure out a more profitable path to growth…

Web 2.0 companies like Google and Facebook were built on safe harbour protections. But with generative AI, do you think that immunity makes sense? In India, there was an incident where Bard refused to summarise an article from a conservative outlet, but Google said that was not its viewpoint…

In the US, it is understood that those protections do not apply to AI-generated content. So you can actually sue Google or Facebook if an AI they own says anything incorrect about any individual or organisation, or hurts any section of the population. As for the specific incident you mentioned, what do you expect from Google? They try to be very politically diplomatic and lean towards not hurting people, at the cost of not giving the right information, even when there is an alternative viewpoint… An ideal AI would give you all kinds of viewpoints, and it is up to the user to go and read all of them. That, or the model can say that it does not want to answer a certain question. But it is not supposed to say that it’s not a good thing to ask about certain things.

What are the advantages of being a wrapper built on existing models versus companies that train foundation models? Do you see any potential business risk there?

As a startup, your only job is basically to build a product and get some kind of user base. What counts as product-market fit can be argued, whether it’s how many users you have, how much revenue you make, or whether you have a moat that nobody else can offer. If you build a product that is used by millions of people, you don’t have much else to worry about. You don’t have to build a model or do the indexing, because even if you did but had no users, what would your model be worth? I’ve taken a lot of inspiration from Jeff Bezos here: there was a point where people kept asking him about Amazon’s moat, given that they were using existing systems… As for Perplexity, whether we are a wrapper or serve our own model, no user really cares. What they care about is whether it gets them good answers quickly and accurately.


News publishers are clearly unhappy about AI bots scraping their archives. Questions around intellectual property have been raised, and the New York Times has already sued OpenAI and Microsoft over it. Do you think the relationship between publishers and AI companies will get more adversarial from here, or is there scope for collaboration?

For us, it’s not adversarial at all. We do not take content from any publisher and train models on that data. We only build on top of existing models and APIs and try to offer a service that gives the end user higher-bandwidth access to the information on your platform. But we also make users aware that the information is coming from you through citations to sources, so that they are fully aware of what they are actually reading. If part of an answer came from the Indian Express, the user is clearly shown its logo and a link at the top, and they can click on it to go straight there. We believe it’s an even more efficient way to let the end user know what you’re writing on your site than having it randomly ordered in a bunch of links and having you worry about SEO targeting and AdWords.

But since platforms like Perplexity already show an upfront summary for a search query, don’t you think click-through rates for news websites are going to fall significantly?

Definitely, they’re going to drop, and I would be lying if I said otherwise. But what I’m trying to say is that a click-through on a platform like Perplexity, where users have already seen a summary, is going to be much higher intent. It should not be viewed the same way as a click-through on Google: users have already seen a summary of what they want, so if they still click through, it means they are impressed by your content. The value of that referral traffic is even higher.

On AI regulation, people like Sam Altman have called for a global consensus. On the other hand, you have people like Meredith Whittaker (of the Signal Foundation) who believe these calls are a facade to stall conversations around regulation. How do you view that dichotomy?

It is very premature to think about AI regulation today. It is very self-serving for people with a lot of capital and compute infrastructure to want AI regulated before the startups that might want a say in how it should be regulated are able to get a seat at the table. I would even say it is somewhat anti-democratic, because not everybody’s voice gets heard. And the people who get to be in these conversations at all are those who have raised billions of dollars in venture or corporate funding. The other thing worth thinking about is: why are they so concerned? If it is so dangerous, wouldn’t you want more eyeballs and voices on this? Isn’t it hypocritical to say that this thing is so dangerous, yet let us continue to build it and trust us to make it safe? And trust only us…

But the idea that regulation is totally unacceptable and that we should never regulate is not true either… Deepfakes should be regulated, and audio that fakes someone’s voice and is misused for bank fraud should be regulated… A global collaboration is useful in the sense that we do want a roughly similar set of principles across all countries… you don’t want India to allow some use case that America doesn’t allow. From that perspective, I think educating world leaders about AI, and the ways in which it can be misused, is totally worth it.


The mainstream generative AI conversation has so far largely included companies from countries in the democratic world. But China has ambitions in the space too. How do you see Beijing’s influence in the space shaping up?

China is definitely going to have a big advantage in that the government can sponsor a centralised LLM that is distributed through a centralised everything app like WeChat, where everybody interacts within one platform. But that is also going to be its weakness, in that everything will be centralised and diverse experiences will not be possible. So in terms of global sovereignty, it’s great for them, but the consumer experience, and the diversity of experiences, is not going to be that good. They can build amazing models, but I think they are crippled by America’s sanctions on exports of powerful GPUs to China. That could also end up driving innovation in China, though… Another type of AI where China will be quicker than the US is physical robots: they will commoditise the market, make robots super cheap and even export them to the US.

Is there clarity on what could be the revenue model for generative AI platforms like Perplexity going forward?

We will always have a free version. The paid version will be something that not everybody goes for, and we are completely okay with that. We would love to figure out ways to monetise even the free version, and there are great examples of companies like YouTube that have successfully done something similar. Amazon Prime does pretty well with different payment tiers… But we do not want to end up in the same position as Google, where we say we care about knowledge and then end up running ads that compromise the truthful answer, which is what users come to Perplexity for.

Access to computing capacity is a big element in building these large models. Do you think that will be a big challenge for start-ups and companies in India, since they may not have ready access to such cutting-edge hardware?

It’s not fair to say India hasn’t tried to make its own hardware. For example, the Shakti microprocessor was built in India. But it is fair to say that when it comes to hardware accelerators, which make your matrix multiplications a lot faster, India has not made a big breakthrough yet, though people are trying.

It (large language model training) is just very capital intensive… and talent is also a very big factor. There are only a few people in the world who really know how to train these very large language models: the art of curating data, carefully running the evaluation metrics, and making sure these models generalise. Most of those people are working in the United States, and most of them work at a handful of companies, including OpenAI, DeepMind, Anthropic, Meta and Mistral. So the knowledge of how to train a model with GPT-4-level capability is very concentrated in these few companies.


The hope is that Meta’s stated plan to open-source all these models and support the ecosystem will benefit a lot of start-ups in the US and India that don’t want to train these models themselves. They can take these base models and build on top of them, bootstrapping off them… I think inference hardware, if not training hardware, will likely come out of India; it’s very hard to compete with Nvidia on training hardware. The thing with AI hardware is that it’s coupled with the software. For instance, Nvidia GPUs are coupled with the CUDA libraries, which are needed to make good use of the hardware, and that ecosystem has existed for nearly two decades now. That’s why Nvidia has a big advantage, and I don’t see that changing.

So not in terms of training clusters, but I think we will see some inference hardware. Once you have the model, taking it and serving it to a lot of people mainly requires running the matrix multiplications really efficiently, and that can potentially be done on a custom chip. A lot of people are actually trying to take on Nvidia there, and India can potentially contribute too.

With Nvidia launching Chat with RTX, we see hardware companies also getting into AI software. How do you see these lines intersecting?

My guess is that companies reaching OpenAI’s scale in serving consumer products that run on GPUs definitely want to save costs. That runs against the incentives of whoever is selling the chips, because they obviously want to sell chips at as high a margin as possible and keep increasing their market cap. Similarly, the chip seller is also interested in benefiting from the products built on top of its chips… They own the next generation of chips and can build even better and more efficient products.

For decades now, online publishers have had to contend with SEO to make sure they land at the top of Google Search results. With generative AI search engines becoming more common, how will they have to optimise their content differently?

At the end of the day, the model is just looking for good content. It’s a slightly different ranking: it is not based just on how many people click on your link, but on what the large language model thinks is relevant on your webpage to the query the person asked. It’s very strange to think about it in terms of using keywords to make your content relevant, because it will not work that way anymore… You need to think about it differently: instead of asking how to get my link displayed more often, the question should be, for a given query, how do I make sure my story definitely gets used?