The rapid rise of social media platforms has put child safety at risk. Across the globe, numerous child safety cases have been reported and flagged, and companies have swung into action to mitigate them. On Wednesday, Meta announced its participation in the Lantern programme, which helps companies share signals about accounts and behaviours that violate their child safety policies.

The Mark Zuckerberg-led tech giant is a founding member of Lantern, which, according to the company, is the first cross-platform signal-sharing programme for child safety. Meta’s involvement with the Lantern programme puts the spotlight on the policy guardrails that tech companies implement, and on how they oversee compliance and redress violations. Beyond policy oversight, measures to safeguard children from online abuse are paramount.

Meta has been using technology such as Microsoft’s PhotoDNA and Meta’s PDQ to prevent the spread of child sexual abuse content on the internet. However, the social media giant has acknowledged the need to have additional solutions to stop predators from accessing multiple apps and websites to target children. “At Meta, we want young people to have safe, positive experiences online, and we’ve spent a decade developing tools and policies designed to protect them,” the company said in its official release.

To understand what Lantern stands for, it is important to know its founders and their philosophy.

What is the Lantern programme?

The Lantern programme was launched by Tech Coalition, a global alliance of tech companies that have collaborated to fight child sexual abuse and exploitation online. “We work to inspire, guide, support our industry members, helping them work together to protect children from online sexual exploitation and abuse,” reads the official website of the coalition.

The coalition has taken on the task of aligning the global industry by pooling knowledge, upskilling its members and strengthening all links in the chain. The aim is to ensure that even the smallest startup has access to the same expertise and knowledge as the world’s tech giants. “We envision a digital world where children are free to play, learn, and explore without fear of harm,” reads the ‘Our Values’ section on the website.

Why is there a need for Lantern?

The first-ever cross-platform signal-sharing programme has been created to help companies enforce their child safety policies. According to the coalition, online child sexual exploitation and abuse (CSEA) is a pervasive threat that can span platforms and services. The programme identifies ‘inappropriate sexualised contact with a child,’ also known as online grooming, and financial sextortion of young people as imminent dangers.

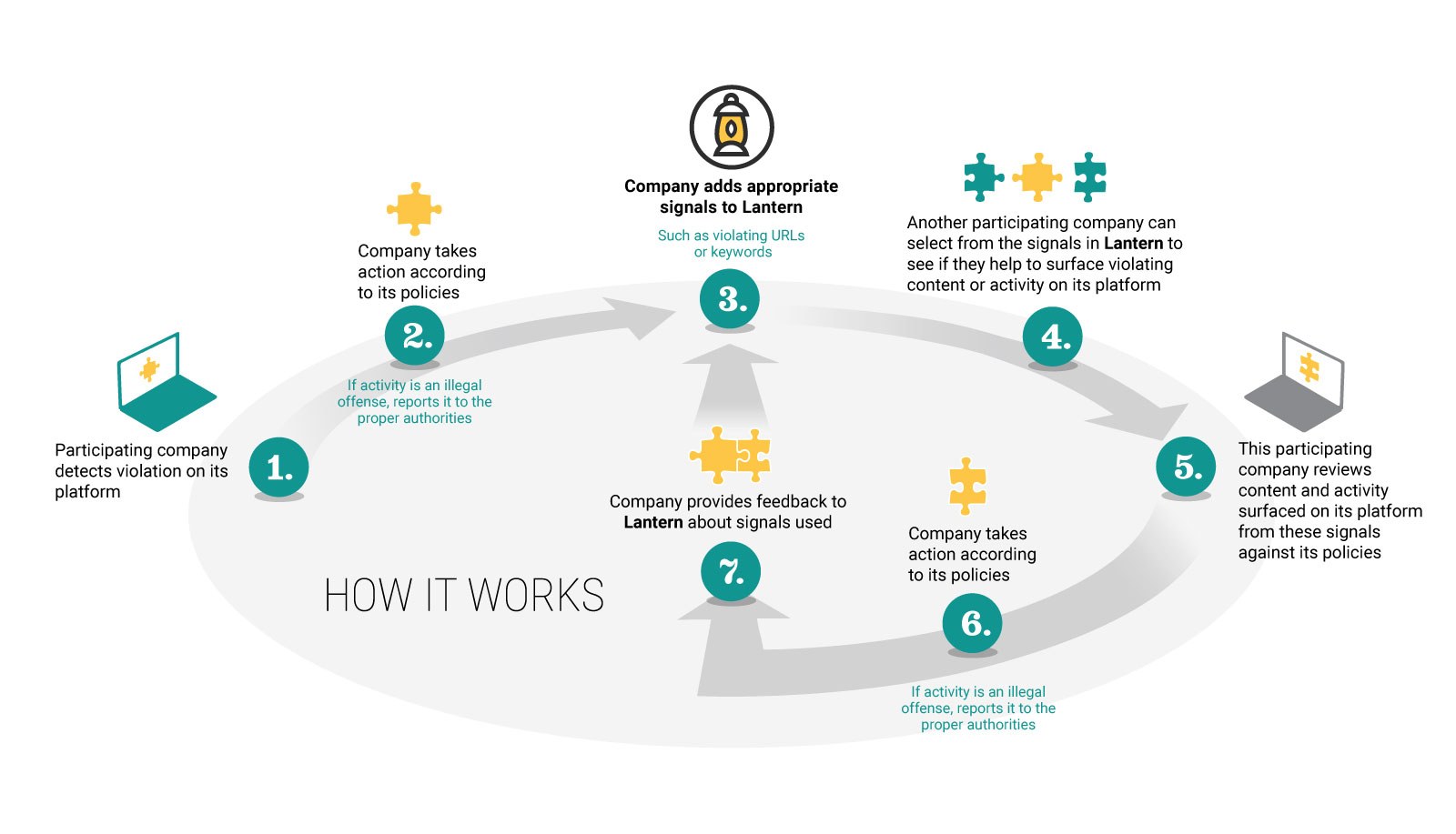

The Lantern programme was launched by Tech Coalition. (Image: Technology Coalition)

Individuals who engage in these activities target young users on public forums by posing as peers or as friendly connections. They later direct these unsuspecting users to private chats and different platforms to solicit or share child sexual abuse material, or to force them to make payments by threatening to share their intimate images publicly.

Since these activities are not limited to a single platform, a company may not be able to fully grasp the gravity of the harm caused by these bad actors. According to Lantern, to comprehend the scale of such abuses, companies should work together to take appropriate action. Lantern allows companies to collaborate and securely share signals about activity and accounts that violate their policies against CSEA.

What are these signals?

According to the coalition, signals are pieces of information related to the accounts and profiles that violate the policies. These could be email addresses, child sexual abuse material hashes, or keywords that were used to groom children or to buy or sell such material. It is to be noted that these signals cannot be used as definitive proof of abuse. However, they may offer clues for an investigation that may later help a company trace the perpetrators in real time.

Before Lantern, there was no mechanism for companies to jointly act against such bad actors, which let many of them evade detection. According to the coalition, Lantern can enhance prevention and detection capabilities, speed up the identification of threats and build situational awareness, among other benefits.

How can companies share such signals?

Companies that are part of the programme can upload to Lantern signals about activity on their platforms that violates their policies against CSEA. Other companies can then choose from the signals on Lantern, run them against their own platforms, review activity and content that match a signal, and take action based on their own enforcement procedures. These actions could include removing an account or reporting criminal activity to the concerned authorities.
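The flow described above can be pictured with a small Python sketch. It is purely illustrative and does not reflect how Lantern is actually built: the `Signal` and `SharedSignalStore` classes, the `find_matches` helper and the sample data are all hypothetical names made up for this example, and only email-type signals are handled. One company uploads a signal, another selects signals of a given type, runs them against its own accounts and queues any match for human review, since a match is a lead and not proof of abuse.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Signal:
    """One shared signal (hypothetical shape, not Lantern's real schema)."""
    kind: str    # e.g. "email", "content_hash" or "keyword"
    value: str   # stored in normalised form so equal signals compare equal
    source: str  # the participating company that uploaded it


@dataclass
class SharedSignalStore:
    """Hypothetical stand-in for the shared signal database."""
    signals: set = field(default_factory=set)

    def upload(self, signal: Signal) -> None:
        # A company contributes a signal found on its own platform.
        self.signals.add(signal)

    def select(self, kind: str) -> list:
        # A company chooses which signals it wants to run locally.
        chosen = (s for s in self.signals if s.kind == kind)
        return sorted(chosen, key=lambda s: s.value)


def normalise_email(address: str) -> str:
    return address.strip().lower()


def find_matches(store: SharedSignalStore, local_accounts: dict) -> list:
    """Return (account_id, signal) pairs to hand to human reviewers."""
    shared = {s.value: s for s in store.select("email")}
    matches = []
    for account_id, email in local_accounts.items():
        signal = shared.get(normalise_email(email))
        if signal is not None:
            matches.append((account_id, signal))
    return matches


# Platform A uploads a signal; platform B checks its own accounts against it.
store = SharedSignalStore()
store.upload(Signal("email", normalise_email(" Flagged@Example.com "), "platform_a"))
review_queue = find_matches(
    store, {"u1": "flagged@example.com", "u2": "other@example.com"}
)
```

In this sketch the matching step only produces a review queue. Any enforcement, such as removing an account or reporting to the authorities, is left to each company's own procedures, as the coalition describes.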

The coalition acknowledges that building cross-platform procedures can be tedious and time-consuming. It has been developing the programme over the past two years in collaboration with tech companies. With the process in place, the Tech Coalition is also responsible for facilitating access as well as overseeing compliance with the programme. The coalition says it is committed to responsible management through safety and privacy by design, respect for human rights, transparency, and stakeholder engagement.
