TikTok maker ByteDance unveils OmniHuman-1, a new AI tool that can make videos from a photo

ByteDance researchers say they trained OmniHuman-1 on about 18,700 hours of human videos.

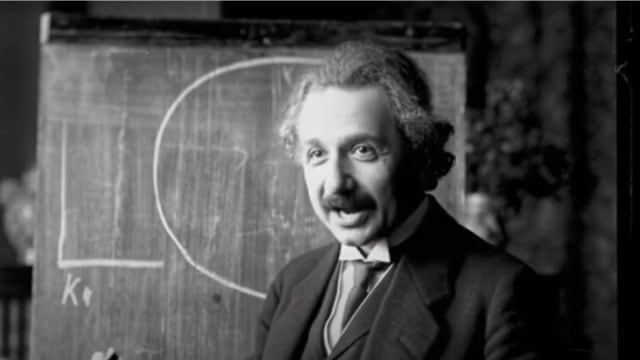

A still from a video of Albert Einstein talking generated by ByteDance's OmniHuman-1. (Image Source: ByteDance)

TikTok’s parent company ByteDance recently unveiled a new AI tool that can generate lifelike videos of people talking, playing instruments and more from a single photo. Dubbed OmniHuman-1, the tool “significantly outperforms existing methods, generating extremely realistic human videos based on weak signal inputs, especially audio,” ByteDance says.

In a research paper published on arXiv, the company claimed that the new tool can work with images of any aspect ratio, whether they are portraits, half-body or full-body shots, and deliver “lifelike and high-quality results across various scenarios.”

This is a step up from other AI models, many of which can only change facial expressions or make humans speak. On the OmniHuman-1 page hosted on Beehiiv, researchers shared several sample videos of the tool's output, including hand and body movements from multiple angles and animals in motion.

In one black-and-white video, OmniHuman-1 shows the renowned scientist Albert Einstein talking in front of a blackboard, gesturing with his hands and changing facial expressions. ByteDance says it trained the new tool on more than 18,700 hours of human videos, combining that footage with various types of inputs such as text, audio and physical poses.

The researchers also suggest that OmniHuman-1 currently outperforms similar systems across multiple benchmarks. OmniHuman-1 isn’t the first image-to-video generator, but ByteDance’s new tool may have an advantage over its competitors since it was likely trained on videos from TikTok.