In the early hours of May 8, missiles and drones were launched across India’s northern and western borders, originating from Pakistan. As India responded to these aerial incursions, another kind of salvo was underway — not of steel and fire, but of pixels and lies.

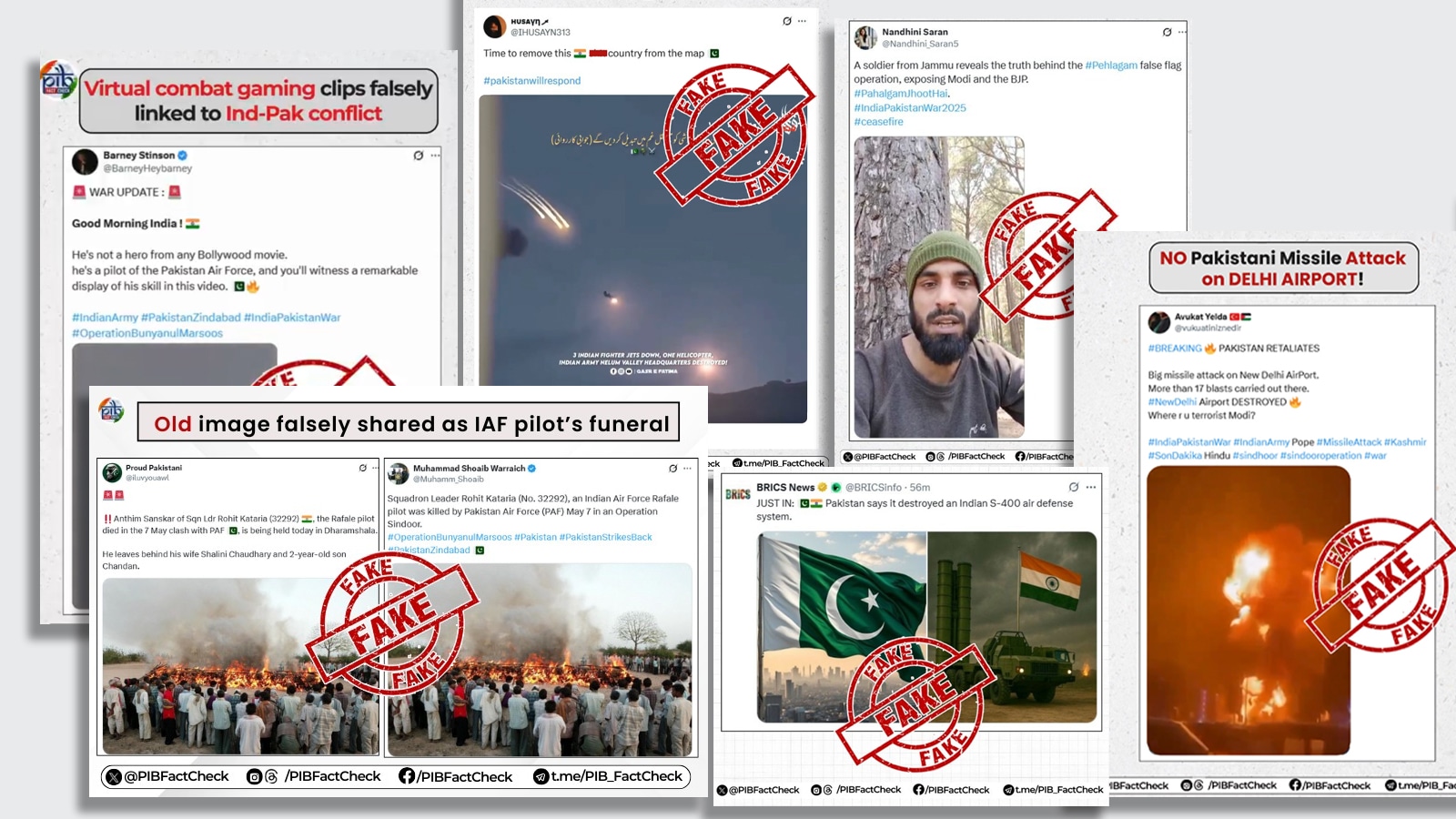

During the conflict, numerous social media accounts, some verified and others anonymous, shared a barrage of images and videos related to the events. Many of them were later identified as doctored, misrepresented, or unverified.

The visuals illustrated how, in the modern era, conflicts are no longer just about territory but also about narrative.

Phase One: The bombardment of visuals

The propaganda offensive began almost simultaneously with the military action. An image of a fiery explosion was shared widely, with captions claiming an Indian Air Force base had been struck. It was an old image — from Kabul Airport, August 2021 — but had been strategically selected to evoke urgency and fear.

An old image from the Kabul airport blast was falsely shared as showing explosions at Jammu Air Base.

Hours later, another video emerged, purporting to show Hazira Port in Gujarat under attack. It was, in reality, footage of an oil tanker explosion from 2021. Next came a viral video of a supposed drone strike in Jalandhar. This too was a misfire of misinformation — in truth, it depicted a farm blaze, timestamped before any known drone activity.

Then came a video claiming a Pakistani battalion had destroyed an Indian post, supposedly manned by the ‘20 Raj Battalion’ — a unit that does not exist. The video was flagged and debunked by Indian fact-checkers.

According to the Centre for the Study of Organised Hate Crimes, a nonprofit, nonpartisan think tank based in Washington, video game footage was weaponised as “evidence” of military victories in the context of airstrikes and military engagements. “Footage from pre-existing games were edited with text overlays, patriotic soundtracks, and strategic commentary to create battlefield narratives that generated millions of views,” the organisation said in its report, Inside the Misinformation and Disinformation War Between India and Pakistan, published on May 16.

Phase Two: The infrastructure of illusion

The actors behind these digital salvos are not lone trolls or misguided patriots. Increasingly, they are coordinated networks, employing the techniques of modern marketing: microtargeting, A/B testing, and algorithm gaming.

“These are very structured and handled as part of strategic responses around conflicts,” says Subimal Bhattacharjee, a defence and cyber security analyst and former country head of General Dynamics, a global aerospace and defence company. “You would have dedicated teams building up content that’s then disseminated through pre-decided networks, including social media influencers, bot networks, and even paid criminal syndicates to amplify it. This is a kind of parallel warfare.”

Tarunima Prabhakar, co-founder of Tattle, a Delhi-based organisation focused on developing citizen-centric tools to track misinformation, agrees that the system is deliberately multi-platform and plays into algorithmic weaknesses across media. “There’s algorithmic amplification on social media. The most sensational, clickbaity content spreads fastest,” she says. “But even without algorithms, on apps like WhatsApp, people themselves become amplifiers — trying to be the first to share, to be the knowledgeable one in their network.”

What’s more, she adds, the overlap of television, social media, and encrypted messaging creates a feedback loop that reinforces emotional narratives over factual ones. “Each medium interacts with the other, whether television, social media, or messaging apps.”

Data voids and the vacuum of trust

One of the most dangerous dynamics in the May escalation, Prabhakar says, was the role of data voids — a concept describing the information vacuum created when there is a scarcity of verified news on fast-moving events.

And in such situations, ordinary citizens are often caught in the crossfire of conflicting narratives. “The impulse is to panic-scroll, to seek clarity through more consumption. But in this case, the better response might have been to tolerate not knowing,” she says. “People needed to be okay with knowing less — to not let anxiety force them into the arms of misinformation.”

The psychology of panic

Dr Itisha Nagar, a Delhi-based psychologist who studies mass behaviour in high-anxiety scenarios, argues that misinformation doesn’t just exploit confusion; it provides temporary emotional relief.

“Rumours are not just idle talk; they serve a deep psychological purpose,” she says. “In times of conflict, they reduce uncertainty and give a false sense of control.”

People don’t necessarily share information because it’s true; they share it because it resonates, Dr Nagar says. “We are social beings. Rumours help us connect, express concern, and bond emotionally, even if the facts are wrong.”

Prabhakar reinforces this point from the technological side. “In high-emotion events, you have motivated reasoning. People believe what aligns with their ideology. They’re not looking for facts; they’re looking for affirmation.”

Phase Three: The damage done

What makes digital misinformation so potent during conflicts is its latency — the delay between lie and debunking. A falsehood spreads at the speed of a click; the truth chases it uphill. By the time fact-checkers weigh in, the damage is done: fear escalates, public opinion hardens, and policy discussions are distorted.

This latency is magnified by encrypted platforms, where virality cannot be easily monitored or countered.

Once again, Dr Nagar warns that the emotional environment created by misinformation is as harmful as the content itself. “It’s counterintuitive, but sharing a rumour can feel like quenching a thirst for predictability, even though it’s like drinking saltwater. The more you consume, the thirstier and more anxious you become.”

“Telling people to ‘stay calm’ in a high-anxiety situation without giving them real information is like putting a sitting duck in a storm and asking it not to shiver,” says Dr Nagar.

That’s not all: social media also has an impact on diplomacy. Nandan Unnikrishnan, Distinguished Fellow at the Observer Research Foundation in New Delhi, says all governments are sensitive to public opinion. “However, it is difficult to distinguish what is real.”

“In India, what you see online is often the view of less than 20% of the population, but those voices end up influencing public perception,” he says.

This distortion effect, he warns, turns digital platforms into what he calls a “different zoo” — an unpredictable ecosystem where anonymous handles, state-run accounts, and bots coexist with genuine public voices. “It’s very difficult to distinguish who’s a tool and who’s real.”

The stakes are particularly high when governments are active participants in this ecosystem, not just as targets but as the sources of content. “Officials should be careful when they post from personal accounts or under dual-use accounts — where it’s not always clear if it’s the state speaking, or an individual,” Unnikrishnan says.

The counteroffensive

Authorities across the world are working towards countering misinformation and disinformation. Cyber commands now sit beside infantry divisions. Fact-checking has become a core pillar of national defence. India’s PIB Fact Check unit has moved swiftly to debunk false narratives, but the sheer volume of misinformation makes this a Sisyphean task.

For citizens, Prabhakar offers one final suggestion: humility in uncertainty. “The healthiest thing we could have done,” she says, “was to be okay with not knowing everything. That, more than anything, might be the antidote to disinformation in the fog of digital war.”
