In an attempt to check the “growing misuse of synthetically generated information, including deepfakes,” the Centre has proposed draft rules that require mandatory labelling of AI-generated content on social media platforms like YouTube and Instagram. The social media platforms will be required to seek a declaration from users on whether the uploaded content is “synthetically generated information”.

According to the draft amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, platforms that allow the creation of AI content will be required to ensure that such content is prominently labelled or embedded with permanent, unique metadata or an identifier. For visual content, the label should cover at least 10 per cent of the total surface area; for audio content, it should cover the initial 10 per cent of the total duration.
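To give a sense of scale, the two 10 per cent thresholds can be worked out for a sample frame and clip. This is only an illustrative sketch: the function names are hypothetical, and it assumes that "surface area" means the pixel area of the frame and that the audio threshold is a simple share of running time. The draft rules themselves do not specify how the measurement is to be made.

```python
def min_label_area_px(width_px: int, height_px: int, percent: int = 10) -> int:
    """Smallest whole number of pixels covering `percent` of the frame area.

    Assumption: 'surface area' is read as width x height in pixels.
    """
    total = width_px * height_px
    # Integer ceiling division so the label never falls below the threshold.
    return (total * percent + 99) // 100


def min_audio_label_seconds(duration_s: float, percent: int = 10) -> float:
    """Length of the opening stretch of audio that would carry the label.

    Assumption: the threshold is a plain percentage of total duration.
    """
    return duration_s * percent / 100


if __name__ == "__main__":
    # A full-HD frame and a three-minute clip, chosen purely as examples.
    print(min_label_area_px(1920, 1080))
    print(min_audio_label_seconds(180.0))
```

On these assumptions, a full-HD frame of 1920 by 1080 pixels would need a label of at least 207,360 square pixels, and a three-minute audio clip would carry its label for the first 18 seconds.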

A senior government official said that AI content generated through tools such as OpenAI’s Sora and Google’s Gemini would come under the ambit of these rules, and that these services would need to add a label and metadata to synthetic content.

In effect, once the draft is finalised, an AI video on a platform such as YouTube would carry two separate labels: one embedded in the video itself (added at the time of creation), and another on the YouTube page hosting the video.

“In Parliament as well as many other fora, people have demanded that something should be done about the deepfakes which are harming society, people using somebody’s, some prominent person’s image and creating deepfakes which are then affecting their personal lives, privacy, as well as the various misconceptions in society. So the step we’ve taken is to make sure that users get to know whether something is synthetic or real. Once users know, they can take a call in a democracy. But it’s important that users know what is real. That distinction will be led through mandatory data labelling,” IT Minister Ashwini Vaishnaw said.

In an explanatory note, the IT Ministry said: “Recent incidents of deepfake audio, videos and synthetic media going viral on social platforms have demonstrated the potential of generative AI to create convincing falsehoods — depicting individuals in acts or statements they never made. Such content can be weaponised to spread misinformation, damage reputations, manipulate or influence elections, or commit financial fraud.”

As per the draft amendments, social media platforms would have to do three things: get users to declare whether uploaded content is synthetically generated; deploy “reasonable and appropriate technical measures”, including automated tools or other suitable mechanisms, to verify the accuracy of such declarations; and, where the declaration or the technical verification confirms that the content is synthetically generated, ensure that this is clearly and prominently displayed with an appropriate label or notice.
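The declare, verify and label sequence described above boils down to a simple rule: a label is due if either the user's declaration or the platform's own check indicates synthetic content. The sketch below is a hypothetical illustration of that reading, not anything prescribed by the draft; the function and parameter names are invented, and the verification step is reduced to a single yes/no input.

```python
def needs_label(user_declared_synthetic: bool, verification_found_synthetic: bool) -> bool:
    """Return True when the upload should carry a 'synthetically generated' label.

    Assumption: either signal on its own is enough to trigger the label,
    following the draft's wording 'declaration or technical verification'.
    """
    return user_declared_synthetic or verification_found_synthetic


if __name__ == "__main__":
    # Walk through the four possible combinations of the two signals.
    for declared in (True, False):
        for verified in (True, False):
            print(declared, verified, needs_label(declared, verified))
```

On this reading, only content that the user does not declare as synthetic and that the platform's checks do not flag would go up without a label.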

Platforms that fail to comply may lose the legal immunity they enjoy for third-party content. Under the draft, their responsibility extends to taking reasonable and proportionate technical measures to verify the correctness of user declarations and to ensure that no synthetically generated information is published without such a declaration or label.

The draft amendments introduce a new clause defining synthetically generated information as “information that is artificially or algorithmically created, generated, modified or altered using a computer resource, in a manner that appears reasonably authentic or true”.

The ministry has sought feedback and comments on the draft amendments until November 6.

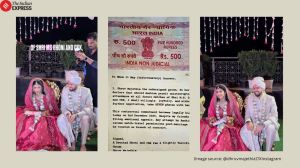

A deepfake is a digitally altered video, typically used to spread false information. In the Indian context, the issue first surfaced in 2023, when a deepfake video of actor Rashmika Mandanna entering an elevator went viral on social media. Close on the heels of that incident, Prime Minister Narendra Modi called deepfakes a new “crisis”.

Last month, China too rolled out its AI labelling rules, under which content providers must now display clear labels to identify material created by artificial intelligence. Visible AI symbols are required for chatbots, AI writing, synthetic voices, face swaps and immersive scene editing. For other AI-based content, hidden tags such as watermarks will suffice. Platforms must also act as monitors — when AI-generated content is detected or suspected, they must alert users and may apply their own labels.