It seems Apple designed the button primarily to turn on Visual Intelligence, and the camera functionality feels like an afterthought. This became clearer to me once Visual Intelligence, Apple’s own system-native visual search tool, arrived on the iPhone 16 series as part of the latest iOS 18.2 developer beta. It’s in preview for now, and won’t be ready for public release for a couple of months.

I briefly tested Visual Intelligence on my iPhone 16 Pro, and here are my first impressions.

What is Visual Intelligence?

Visual Intelligence is only available on the iPhone 16 series. (Image credit: Anuj Bhatia/The Indian Express)


Think of Google Lens, but deeply integrated into Apple devices. This is the simplest and most accurate representation of Visual Intelligence. It’s a native visual search tool that allows iPhone users to search for information directly from the camera. You might wonder why not just use Google Lens instead of Visual Intelligence, especially since Google’s image recognition feature is built right into the Google Search app on your iPhone — and you’d be right to ask.

However, not every iPhone owner uses all of Google’s products. I rarely use Google Lens on my iPhone, even though I utilise Google-made apps frequently. But even if I did switch to Google Lens, there are too many hassles. There are two ways to use Google Lens, with two different apps. One is through the Google app, which analyses imagery in real-time via your phone’s camera without taking a photo. The other method is through the Google Photos app, which analyses the photos after they’ve been taken.

I’m not going to think about which method to use for Google Lens when I’m standing in front of a vintage shop in Old Delhi. I want a solution which is simple and delivers the information I need quickly. That alone justifies why Apple introduced Visual Intelligence in the first place.

iPhone 16’s Camera Control button feels perfect for Visual Intelligence

The iPhone 16’s Camera Control button is the gateway to Visual Intelligence. (Image credit: Anuj Bhatia/Indian Express)


Apple may be heavily marketing the iPhone 16’s Camera Control button, as the company calls it, as a tool for taking pictures, whether for changing settings, adjusting styles, or zooming in on subjects you’re photographing, and it’s everywhere in their adverts and campaigns. However, I feel the best way to use the button is through Visual Intelligence. Now that I’ve tried the feature, it seems clear to me that the Camera Control button is primarily designed to activate Visual Intelligence.


I don’t have to dig into the Google app and find Google Lens. All I have to do is press and hold the button and point the camera at the subject, whether I’m seeking information about a product, finding out the model and year of a car I spotted on the street, or checking out a restaurant in a new city before making a reservation.

If you have ever used Google Lens, Visual Intelligence won’t seem very different. The idea is to provide contextual information about anything you point the iPhone’s camera at. As I mentioned, the functionality is similar to Google Lens, but the iPhone 16’s Camera Control makes Visual Intelligence easier to use. I’m pretty sure even hardcore Android users don’t frequently use Google Lens, for reasons I have explained before.

Visual Intelligence may not be a step up over Google Lens, but that was never Apple’s intention. However, the iPhone 16’s Camera Control button does make visual search more accessible on the device, something a lot of users might not otherwise be doing.

Visual Intelligence is simple, and it works

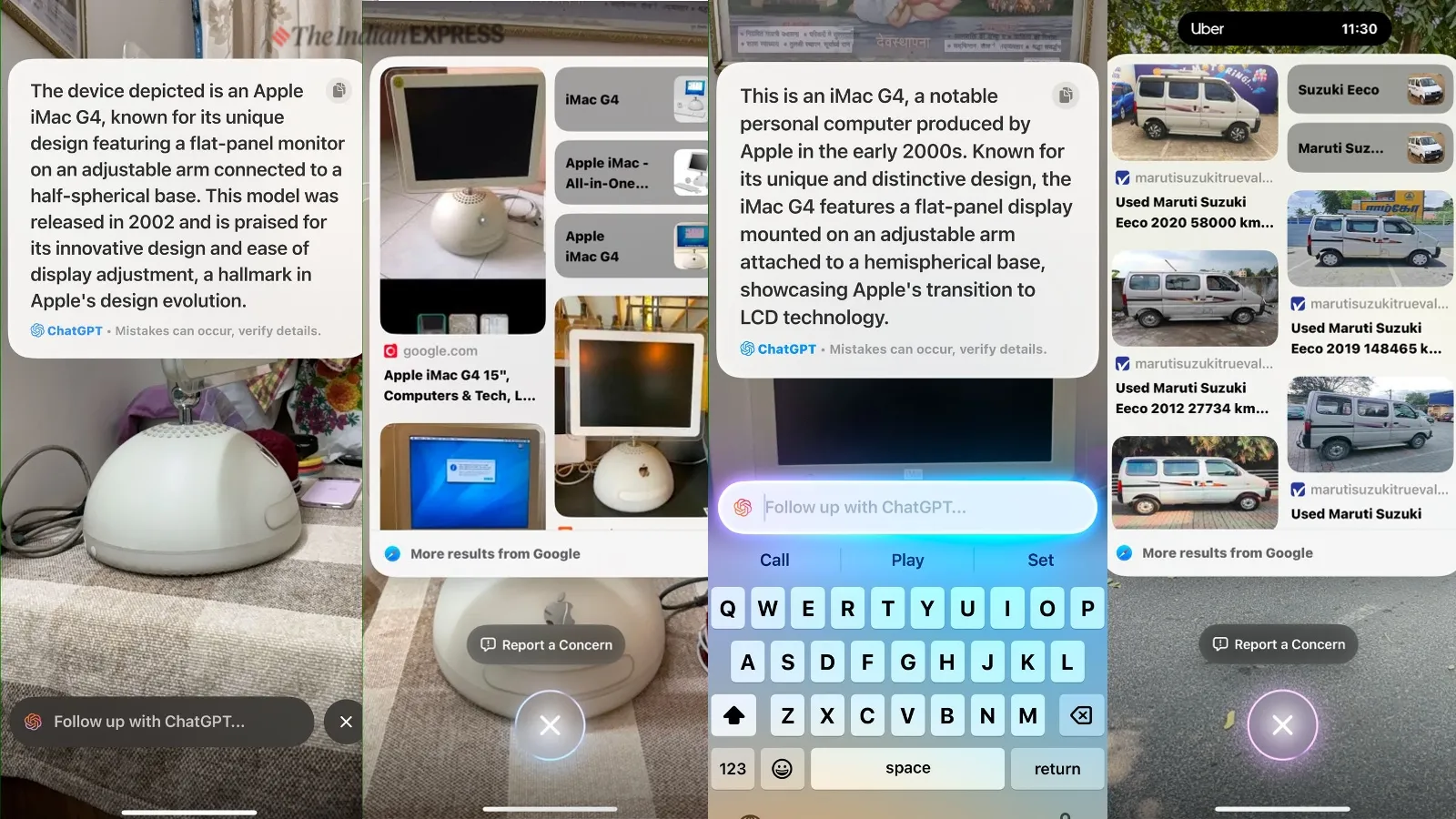

Based on my brief use of Visual Intelligence, I realised that Apple’s alternative to Google Lens has fewer features — similar to the first wave of Apple Intelligence: surface-level, but functional. To test whether Visual Intelligence works as advertised, I clicked and held the Camera Control button on my iPhone 16 Pro, pointed the camera at my iMac G4, and within seconds, Visual Intelligence provided search results. Not only did it identify the computer, but it also offered a few videos and eBay listings.


Apple uses its own resources to power Visual Intelligence but also integrates Google Search and OpenAI’s ChatGPT via third-party connections. This is great because, as a user, I get the best of both worlds. ChatGPT provides text-based information about the product, and I can follow up with further questions, for example to learn more about the history of the iMac G4. Google, on the other hand, offers a more visual approach to product searches: images, YouTube videos, and eBay listings. Apple also promises more privacy, emphasising that it would “never store any image” taken while conducting a visual search.

My take on Visual Intelligence

I will continue testing Visual Intelligence over the next few days to learn more about the Apple tool. Regardless of the divided opinions about the iPhone 16’s Camera Control button, I believe that the physical button holds a lot of potential to unlock various features on the iPhone. Visual Intelligence will evolve as more people begin using it.

I also feel Visual Intelligence has great potential to become a hit among businesses. It remains to be seen how brands will leverage Visual Intelligence to enhance visual search for marketing and create new touch points. The integration of Apple Maps could also play a significant role in improving Visual Intelligence in future by enhancing discoverability and engagement with targeted content.
