CES 2025: Everything revealed by Nvidia CEO Jensen Huang in his keynote speech

Here’s a look at all the new GPU hardware and AI software introduced by Nvidia at CES 2025.

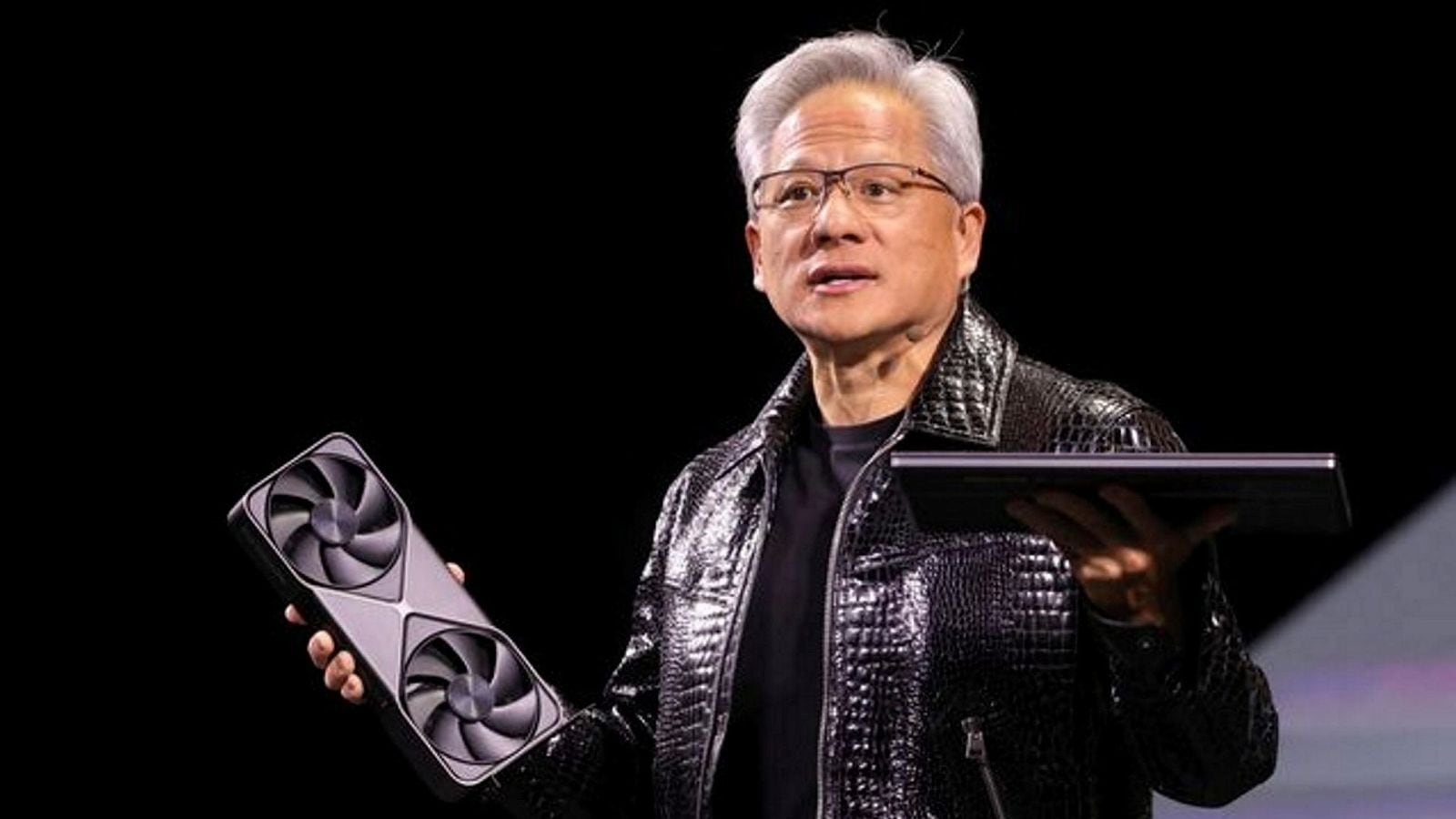

Nvidia CEO Jensen Huang holds a new Nvidia GeForce RTX 5090 graphics card and an RTX 5070 laptop as he gives a keynote address at CES 2025, an annual consumer electronics trade show, in Las Vegas, Nevada, U.S. January 6, 2025. (Image: Reuters)

Nvidia CEO Jensen Huang’s keynote address at CES 2025 was full of AI announcements, with the 61-year-old unveiling the chip giant’s latest line of gaming chips, an AI model to train robots and self-driving cars, its first desktop computer, and much more.

Taking the stage before a packed audience in Las Vegas, Nevada, US, Huang delivered a two-hour presentation that outlined Nvidia’s 2025 game plan and set the tone for the major annual tech conference that will run from January 7 to 10.

Nvidia’s rocketing value has been driven by demand for the processors powering the ongoing AI revolution. Ahead of Huang’s CES keynote, the company’s shares closed at a record high of $149.43, giving it a market cap of $3.66 trillion. That keeps Nvidia the second-most valuable company in the world, behind Apple.


RTX 50-series GPUs

Nvidia officially announced its latest line of GPUs, the RTX 50 series, at CES 2025 on Tuesday. The next-generation RTX GPUs will run on the company’s flagship Blackwell architecture. The previous RTX 40-series GPUs were based on its Ada Lovelace architecture.

Huang unveiled four next-gen GPUs, namely the RTX 5070, RTX 5070 Ti, RTX 5080, and RTX 5090, with prices ranging from $549 to $1,999. The RTX 5090 and RTX 5080 will be available from January 30 onwards, followed by the other two GPUs in February this year.

Nvidia’s latest GPU series comes with a new design that includes two double flow-through fans, a 3D vapour chamber, and GDDR7 memory. All four of the graphics cards are equipped with DisplayPort 2.1b connectors to drive displays up to 8K and 165Hz.

The RTX 5090 is significantly smaller than its predecessor, the RTX 4090, and is reportedly capable of fitting inside a small form factor PC. However, the total graphics power of the RTX 5090 (575 watts) is 125 watts more than that of the RTX 4090.

Nvidia’s RTX 50-series of GPUs will also be integrated into laptops, which are expected to be made available by various PC makers from March onwards.

During his keynote, Huang showed off the capabilities of the RTX 50-series GPUs through a real-time rendering demonstration of key features such as RTX Neural Materials, RTX Neural Faces, text-to-animation, and DLSS 4.

DLSS 4 with multi frame generation

Nvidia announced that it is upgrading its Deep Learning Super Sampling (DLSS) technology with new neural rendering capabilities such as Multi Frame Generation on systems running RTX 50-series GPUs. It will generate “up to three additional frames per traditionally rendered frame, working in unison with the complete suite of DLSS technologies to multiply frame rates by up to 8X over traditional brute-force rendering.”

“The new generation of DLSS can generate beyond frames, it can predict the future. […] We used GeForce to enable AI, and now AI is revolutionizing GeForce,” Huang said in his keynote.

The DLSS 4 upgrade will also work on existing RTX GPUs, although Multi Frame Generation is limited to the RTX 50 series.
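The arithmetic behind the “up to 8X” figure can be sketched roughly as follows. Three generated frames per rendered frame means four frames are displayed for every one the GPU renders traditionally, which accounts for a 4X multiplier; the rest would have to come from the other DLSS technologies, such as upscaling. The function name and the 2X upscaling speedup below are illustrative assumptions, not figures published by Nvidia.

```python
def effective_fps(rendered_fps: float,
                  generated_per_rendered: int,
                  upscaling_speedup: float = 1.0) -> float:
    """Estimate the displayed frame rate under a simple multiplicative model.

    rendered_fps: frame rate of traditional rendering at native resolution.
    generated_per_rendered: AI-generated frames added per rendered frame
        (the article cites up to three).
    upscaling_speedup: assumed gain from rendering at a lower resolution
        and upscaling (hypothetical value, not from the article).
    """
    if rendered_fps < 0 or generated_per_rendered < 0 or upscaling_speedup <= 0:
        raise ValueError("inputs must be non-negative, speedup must be positive")
    # One rendered frame plus the generated frames are shown on screen.
    frames_shown_per_rendered = 1 + generated_per_rendered
    return rendered_fps * upscaling_speedup * frames_shown_per_rendered


if __name__ == "__main__":
    native = 30.0
    boosted = effective_fps(native, generated_per_rendered=3, upscaling_speedup=2.0)
    print(f"{native:.0f} fps -> {boosted:.0f} fps ({boosted / native:.0f}X)")
```

Under these assumptions a game running at 30 fps natively would display 240 fps. Real-world gains depend on the game, the resolution, and the overhead of frame generation itself, which this model ignores.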

Cosmos world foundation models

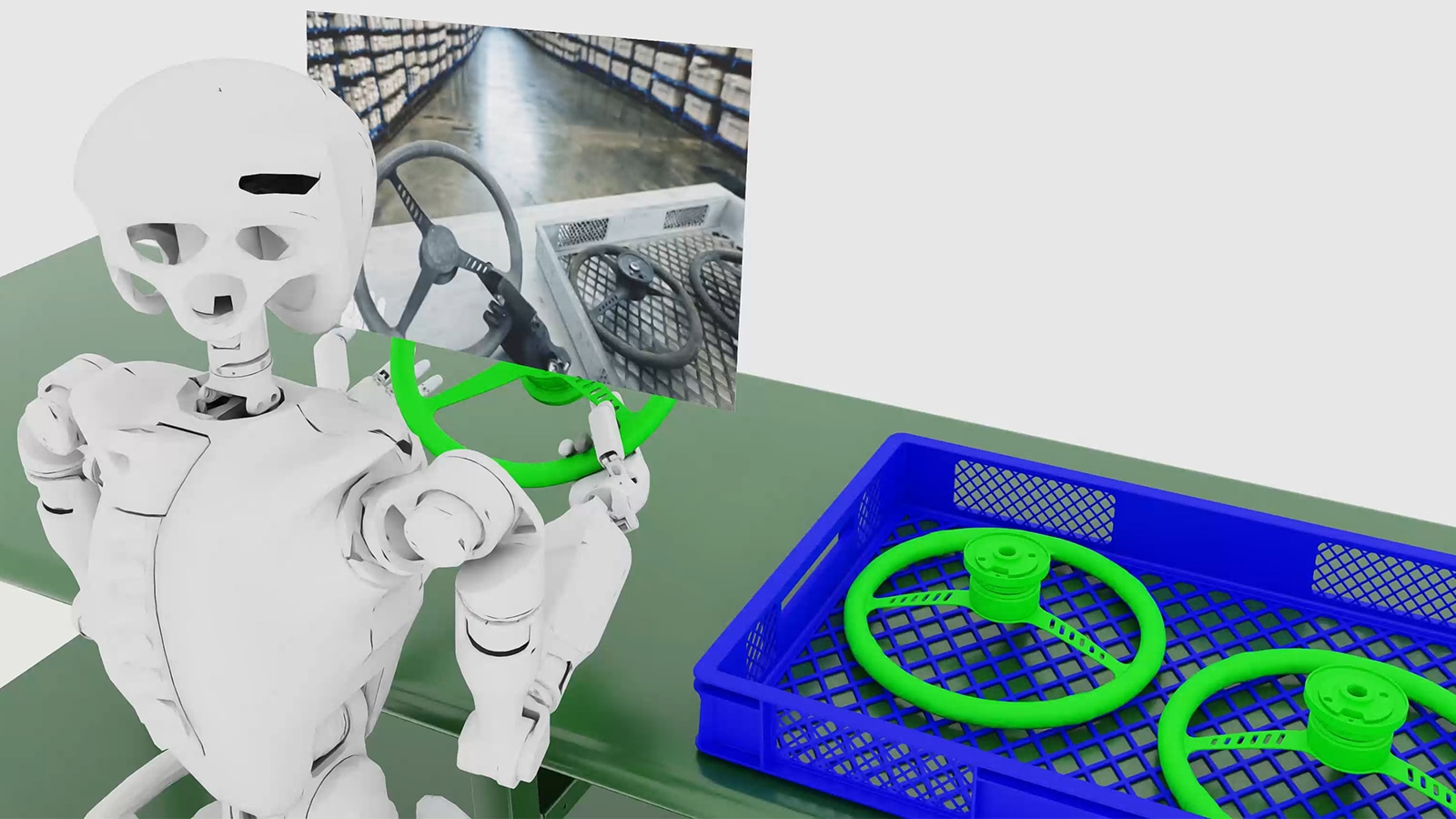

Nvidia has announced a new set of AI models called Cosmos that can be used to generate photo-realistic videos in order to train robots and autonomous vehicles. Users, for instance, will be able to prompt Cosmos to generate a video of a vehicle being driven in an environment that follows the laws of physics.

The synthetically generated data is expected to help robotics companies and AV makers cut down the costs of gathering conventional, real-world data. “We are going to have mountains of training data for autonomous vehicles,” Huang said, adding that data collection from “cars on the road” will continue to be needed.

Nvidia is releasing Cosmos under an “open licence”. “We really hope (Cosmos) will do for the world of robotics and industrial AI what Llama 3 has done for enterprise AI,” Huang said.

Cosmos models can generate physics-based videos from a combination of inputs, like text, image and video, as well as robot sensor or motion data. (Image credit: Nvidia)


Huang also announced a new partnership with Toyota. The Japanese automobile major will reportedly use Nvidia’s Orin AI chips and automotive operating system to provide advanced driver assistance in certain models of its cars.

Project Digits

Nvidia announced its first-ever desktop computer, called Project Digits, which has been designed for AI researchers, data scientists, and students. It costs $3,000, runs a Linux-based operating system, and packs a new GB10 Grace Blackwell Superchip with enough computing performance to run and fine-tune AI models of up to 200 billion parameters.

NVIDIA’s Project DIGITS supercomputer with New GB10 Superchip. (Image credit: Nvidia)


“[Project Digits] runs the entire Nvidia AI stack — all of Nvidia software runs on this. […] It’s a cloud computing platform that sits on your desk … It’s even a workstation, if you like it to be,” Huang said onstage.

Project Digits will become available in March 2025.
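To put the 200-billion-parameter figure in perspective, the memory needed just to hold a model’s weights is roughly the parameter count multiplied by the bytes used per parameter. The sketch below is a back-of-the-envelope estimate only: the precision table and function name are illustrative assumptions, and it ignores activations, caches, and other runtime overhead.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "fp8": 1.0,
    "fp4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the approximate size of the model weights in gigabytes (10^9 bytes)."""
    if precision not in BYTES_PER_PARAM:
        raise ValueError(f"unknown precision: {precision}")
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params = 200e9  # the model size cited for Project Digits
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: {weight_memory_gb(params, precision):,.0f} GB")
```

By this estimate, a 200-billion-parameter model would need about 400 GB for its weights at 16-bit precision but about 100 GB at 4-bit precision, which suggests why low-precision formats matter for running models of this size on a desktop machine.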

Llama Nemotron family of models

Nvidia revealed a new family of open-source large language models (LLMs) built on Meta’s foundational AI model Llama. As a result, the chip giant’s new model series has been christened Llama Nemotron. Enterprises can use the models to create and deploy AI agents for a range of applications such as customer support, fraud detection, and the optimisation of product supply chains and inventory management.

Llama Nemotron models have been trained on high-quality datasets using Nvidia’s latest pruning techniques, the company said in a press release. They will be available in Nano, Super, and Ultra sizes in order to help businesses deploy AI agents at scale. The differences between the three sizes are mostly to do with latency, accuracy, and performance.

Nvidia has said that software firms SAP and ServiceNow will be among the first to use the new Llama Nemotron models. The models can be downloaded from build.nvidia.com and Hugging Face. The AI model family can also be deployed across an enterprise’s cloud, data centres, PCs, and workstations using Nvidia’s existing NIM microservices.

Nvidia Mega

Mega is Nvidia’s newly released robotic fleet management software for warehouses. It is an ecosystem for making the robots in a particular warehouse work in tandem with each other. Mega can manage various types of robots such as autonomous mobile robots (AMRs), robotic arms, autonomous forklifts, and even humanoids.

“Mega offers enterprises a reference architecture of Nvidia accelerated computing, AI, Nvidia Isaac and Nvidia Omniverse technologies to develop and test digital twins for testing AI-powered robot brains that drive robots, video analytics AI agents, equipment and more for handling enormous complexity and scale,” Nvidia said.

“The new framework brings software-defined capabilities to physical facilities, enabling continuous development, testing, optimization, and deployment,” it added.

Mega has reportedly been adopted by a German supply chain company called Kion Group to optimise its workflows.

Autonomous, AI game characters

In 2023, Nvidia released a suite of RTX-powered technologies called Ace to create game characters using generative AI. Now, the company is building on its Ace capabilities to help create autonomous game characters that use AI to perceive, plan, and act like human players.

These autonomous game characters will be powered by a group of multi-modal, small language models that will allow them to “hear audio cues and perceive their environment.”

Nvidia said it has partnered with game developers to deploy these autonomous game characters in popular titles such as PUBG: Battlegrounds, inZOI, and Naraka: Bladepoint Mobile PC Version. The company also announced that its AI virtual assistant G-Assist will be rolled out to GeForce RTX users in February via the Nvidia app.