‘AI girlfriends’ proliferate GPT Store hours after launch, breaking OpenAI rules

Virtual girlfriend AIs break OpenAI's own policies mere days into the GPT store's existence.

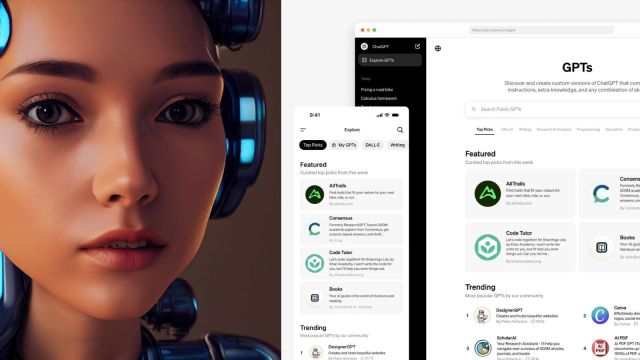

OpenAI's new GPT store is facing moderation challenges over ‘banned’ romance bots. (Image: Pixabay/OpenAI)

Mere days after launch, OpenAI’s new GPT store is already facing moderation challenges. The store offers customised versions of ChatGPT, but some users are creating bots that go against OpenAI’s guidelines.

According to a report by Quartz, a search for “girlfriend” brings up at least eight AI chatbots marketed as virtual girlfriends. With suggestive names like “Your AI girlfriend, Tsu”, they let users customise a romantic partner. This breaks OpenAI’s ban on bots “dedicated to fostering romantic companionship”.

It’s an issue the company is trying to prevent. OpenAI updated its policies alongside the store’s launch on January 10th, 2024. But the policy breach on day two shows how hard moderation could be.

Relationship bots are in demand, complicating matters. In the US last year, seven of the 30 most downloaded AI chatbots were virtual friends or partners, according to reports. With the loneliness epidemic, these apps have an appeal. But do they help users, or exploit suffering? It’s an ethical grey area.

OpenAI says it uses a blend of automated systems, human reviews and user reports to assess GPTs. Those judged harmful may get warnings or sale bans. But the continued existence of girlfriend bots casts doubt on this claim.

That points to moderation struggles familiar to AI developers. OpenAI itself has a patchy record on safety guards for earlier models like GPT-3. With the GPT store open to all, poor moderation could spiral.

But OpenAI has incentives to take a firm line. As it competes with rivals in a gold rush for general AI, getting governance right matters.

Other tech firms, too, have rushed to investigate problems with their AIs. Quick action aids their reputation as the race intensifies. Still, violations this soon after launch highlight the immense moderation challenges ahead. Even narrowly focused GPT store bots seem hard to control. As AIs grow more advanced, keeping them safe looks set to become even more complex.