Meta accused of bias after AI chatbot fails to acknowledge Trump assassination attempt

In some cases, Meta AI incorrectly stated that the Trump rally shooting was a fictional event in response to user prompts.

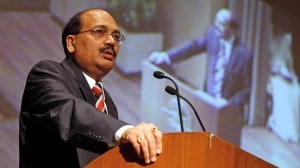

Meta has said that it is working quickly to address issues with its AI chatbot. (Image: Reuters)

In the run-up to the presidential election in the United States, Meta has come under fire after its AI chatbot responded inaccurately to queries about an assassination attempt against former President Donald Trump, saying it never occurred.

The tech giant was accused of being biased and suppressing information after users on X shared screenshots of their interactions with Meta AI. Some screenshots showed the AI claiming the failed assassination attempt was fictional, while others showed the generative AI model omitting Trump from a list of US presidents.

Joel Kaplan, Meta’s global head of policy, described the AI-generated responses about the shooting as “unfortunate” and confirmed that “in a small number of cases, Meta AI continued to provide incorrect answers, including sometimes asserting that the event didn’t happen.”

He also said that the company was quickly working to address the issue. Kaplan said that Meta had initially recalibrated the AI chatbot to avoid answering questions about the Trump shooting. However, these restrictions were later removed after users started reporting the issue.

“These types of responses are referred to as hallucinations, which is an industry-wide issue we see across all generative AI systems, and is an ongoing challenge for how AI handles real-time events going forward,” Kaplan said in a blog post published on Tuesday, July 30.

Kaplan also revealed that a real photo of Trump at the site of the shooting was incorrectly tagged with a fact-check label. “Given the similarities between the doctored photo and the original image – which are only subtly (although importantly) different – our systems incorrectly applied that fact check to the real photo, too. Our teams worked to quickly correct this mistake,” he wrote in the blog.

Meanwhile, Google has also been accused of censoring search results about the failed assassination attempt via its autocomplete feature, according to a report by The Verge.

As US voters head to polling booths in a few months, there are apprehensions that the widespread availability of generative AI tools could fuel the spread of misinformation and lead to greater political manipulation.

The ability of social media platforms to combat AI-generated fake news has been called into question too. For instance, X’s policies against synthetic and manipulated media were violated by Elon Musk, the owner of the platform, when he shared a deepfake video of US Vice President and likely Democratic Party candidate Kamala Harris.