A fake image showing a large explosion near the Pentagon went viral on Monday, causing much confusion as a wave of social media accounts, including multiple verified ones, amplified its circulation. Making matters worse, several top publications fell for it and picked up the ‘incident’ as well.

Amid all-time high levels of misinformation circulating on social media, AI is making fake news harder to fact-check than ever before. And as the technology advances, we can expect these cases to grow. However, for now, there are some ways to detect if an image is AI-generated and avoid the embarrassment of spreading false information.

What gives the Pentagon blast image away?

First, let’s take a closer look at the Pentagon explosion image. Bellingcat, an online news verification group, has pointed out several irregularities with the image that can be applied to other similar images.

The group has pointed out how the frontage of the building is distorted, with the fence melding into the crowd barriers. More importantly, there are no other images, videos, or people posting as first-hand witnesses, which is highly unusual for an event of this scale.

How to spot fake AI-generated images

But there are other techniques for spotting AI-generated images too. Let’s take a look.

Check for on-ground reports

In the case of a big event, reporters quickly swarm the scene to file on-ground reports. This never happened with the now-debunked Pentagon blast. While realistic images are fairly easy to produce thanks to the advent of tools like Midjourney, Dall-E, and Stable Diffusion, on-ground reporting is near impossible to replicate, which makes it difficult to keep a fake convincing for long.

Verify your sources

On Twitter, a blue tick no longer acts as a mark of authenticity, meaning troll accounts that often spread misinformation can carry one too. It is thus crucial to go through the feed of the account in question to ensure that it has a clean track record. Also, check that the account’s location and the location of the event add up.

Use reverse image search tools

If none of the signs that typically give AI-generated images away is apparent, try running a reverse image search to arrive at the source that first shared them. Tools like Google Lens and TinEye do a great job at assisting with this. Once you’ve found where the image appeared for the first time, you can then verify the source as directed above.
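For the curious, here is a rough idea of how software can tell that two copies of an image are "the same" even after resizing or recompression. This is only a minimal sketch of one well-known technique, the average hash, and not a description of how Google Lens or TinEye actually work, since those services do not publish their methods. The tiny 4x4 brightness grids and the function names below are made up for illustration; a real tool would first shrink an actual photo down to a small grayscale grid.

```python
def average_hash(pixels):
    """Return a bit string: '1' where a pixel is brighter than the grid's mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if value > mean else "0" for value in flat)


def hamming_distance(hash_a, hash_b):
    """Count the positions at which two equal-length hashes differ."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(hash_a, hash_b))


# Hypothetical 4x4 grayscale thumbnails (0 = black, 255 = white).
original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 35, 225],
    [18, 215, 28, 235],
]
# The same picture, slightly brightened (as a re-upload or edit might be).
brightened = [[min(255, value + 5) for value in row] for row in original]
# A different picture with the light and dark columns swapped.
unrelated = [[row[1], row[0], row[3], row[2]] for row in original]

same_image_distance = hamming_distance(average_hash(original), average_hash(brightened))
other_image_distance = hamming_distance(average_hash(original), average_hash(unrelated))
```

A small distance suggests two files are copies of the same picture, while a large one suggests they are different images. Matching like this only helps trace where a picture came from; it says nothing on its own about whether the picture is real.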

Check the hands

An output fetched with the prompt “a man drinking a bottle of coke” on Bing Image Creator. Note that the man has 6 fingers. (Express image)

Hands are complex parts of the human body, with a very dense concentration of joints in a small area. This poses a challenge for artificial intelligence (AI) systems that have not been trained with enough data to capture the nuances of hand shapes and movements. As a result, AI-generated images often distort the hands of human subjects, adding or removing fingers or twisting them unnaturally. This flaw can help you spot a fake image when other body parts look realistic.

Check for distortions

When an image has many subjects or a busy scene, some objects may blend or overlap with others. For example, a building in the background might merge with a lamppost, or a person’s foot might distort into the pavement they are walking on.

Analyse the surroundings

AI has only a vague idea of what the locations it’s trained on actually look like. Therefore, just like a human attempting to draw a place from memory, it doesn’t get the details right. For instance, the Pentagon blast image that went viral features a fake location. If a location featured in an image looks familiar, try to search for landmarks and road signs to verify authenticity.

Search for text

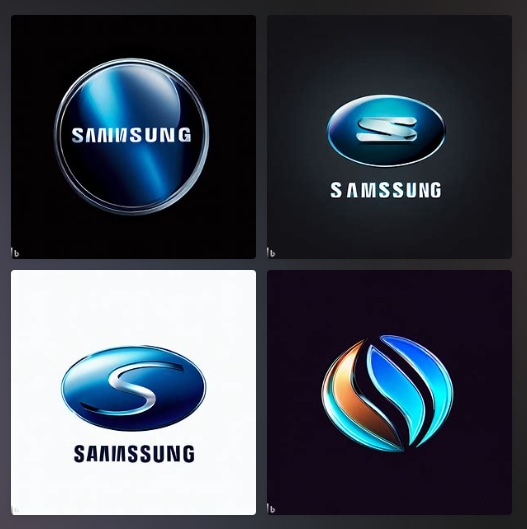

What the Samsung logo looks like according to AI. (Express image)

Text and logos are currently AI image generators’ biggest weakness. Try using any of those tools to generate word art and you’ll quickly realise how badly they struggle. At best, you’ll be able to get the AI to generate a single letter properly, but full words are almost always an unreadable mess. If an image features an urban landscape, try to search for hoardings, road signs, or any other objects that feature text.

Use AI image detectors

While not always accurate, AI image detectors can serve as useful tools to spot fakes when paired with the other methods mentioned in this list. Maybe’s AI Art Detector is a great place to get started.
