Keep a tab on the tech section of The Indian Express for more AI news coming out of Google I/O 2025.

Google I/O 2025 Event Live Stream Updates: Sundar Pichai speaking about the new AI update in his keynote. (Image: Nandagopal Rajan/The Indian Express)

Google I/O 2025 Live Updates: Google kicked off its annual I/O developer conference today (May 20) in Mountain View, California, US, with an opening keynote that was about AI (at least for the most part).

In a long stream of announcements, the search giant drew the curtains back on the new AI products it has been working on over the past few months, including an AI tool for filmmaking, an asynchronous AI coding agent, an AI-first 3D video communication platform, and more.

It introduced upgraded versions of existing AI models and tools such as Gemini 2.5 Flash and Pro, Imagen 4, Veo 3, and Lyria 2, as well as new updates to AI Mode in Search, Deep Research, Canvas, Gmail, Google Meet, etc. Google also announced two new AI subscription plans: the AI Pro plan, which will be available globally at $19.99 per month, and the pricier $249.99 per month AI Ultra plan, which is being rolled out in the US, with more countries coming soon.

But Google I/O 2025 was not just about AI either. We got a glimpse of how Android XR-powered smart glasses will work in real-world scenarios such as messaging friends, taking photos, or asking Gemini for turn-by-turn directions.

The Indian Express’ Nandagopal Rajan is on-site at the event, bringing you the news as it breaks. Stay tuned for more coverage of Google I/O 2025.

“More intelligence is available for everyone, everywhere. And the world is responding, adopting AI faster than ever before…,” Google CEO Sundar Pichai said, adding that the company has released over a dozen foundation models since the last edition of the conference. You can read The Indian Express’ full on-ground news report here.

Here's an easy-to-digest list of everything that was announced during the opening keynote of Google I/O 2025:

- Updates to Gemini 2.5 Flash and Pro models, including native audio outputs

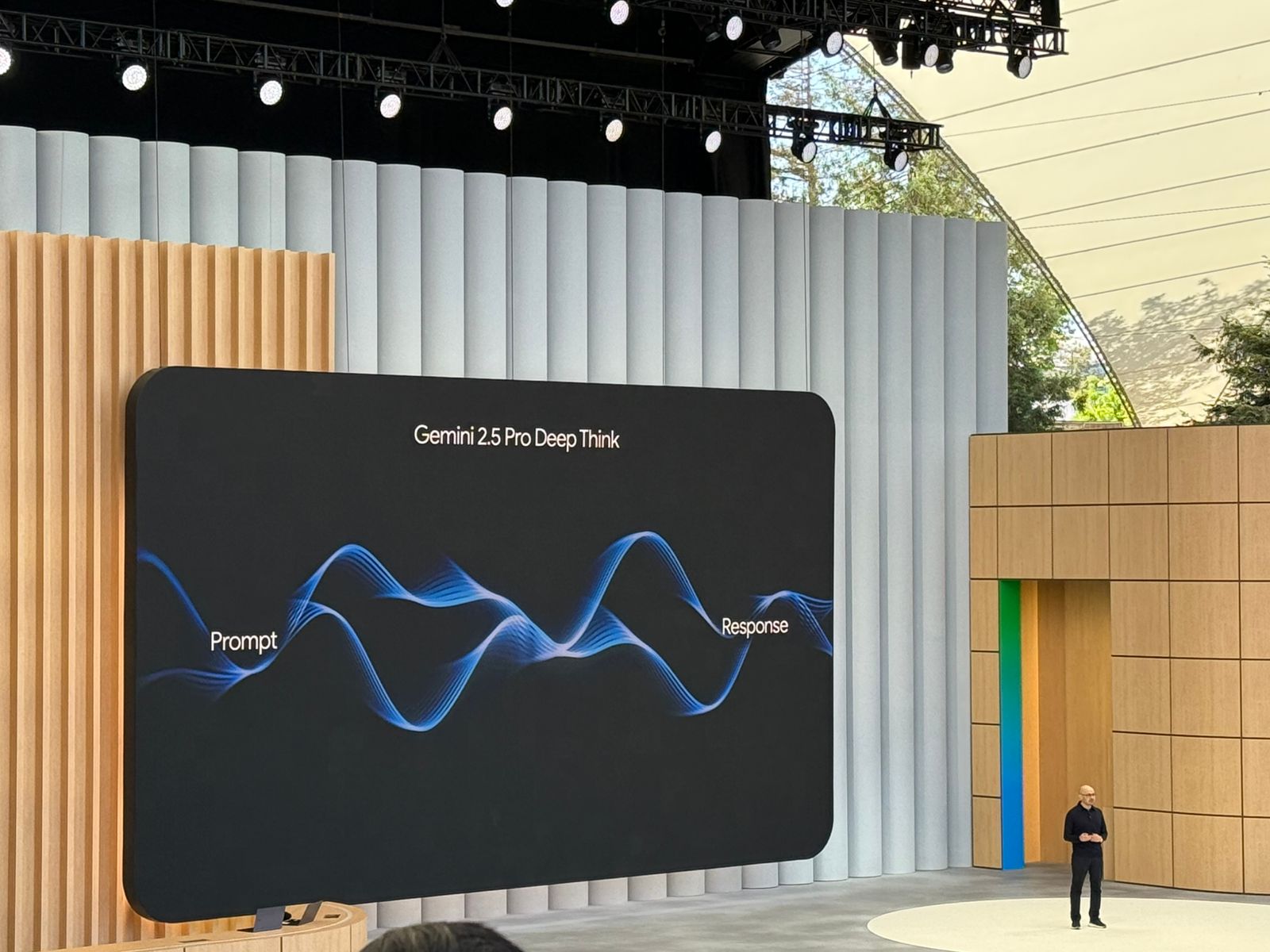

- Deep Think reasoning mode in Gemini 2.5 Pro

- Shopping in AI Mode, agentic checkout, and a virtual try-on tool

- New updates to Deep Research and Canvas

- Imagen 4

- Veo 3

- SynthID Detector

- Personalised smart replies in Gmail

- Speech translation in Meet

- Support for Anthropic's Model Context Protocol (MCP)

- Search Live in AI Mode and Lens

- Jules, an asynchronous AI coding agent

- Flow, a new AI filmmaking tool

- Beam, a 3D AI-first video communication platform

- Google AI Pro and AI Ultra subscription plans

- A look at Android XR-powered smart glasses

- Expanded access to AI Overviews

- Gemini in Google Chrome

- Expanded access to Gemini Live's camera and screen sharing capabilities

Sundar Pichai is back onstage talking about examples of how AI is helping people, such as detecting wildfires and delivering critical medicine by drone.

Android XR supports a broad spectrum of devices, including headsets, smart glasses, and more. It is built in partnership with Samsung and optimised for Qualcomm Snapdragon. Samsung's Project Moohan will be available later this year.

Meanwhile, glasses with Android XR will let Gemini see and hear the world. They work with your phone for hands-free access to apps.

Google AI Pro will be available globally with a full suite of AI products, higher limits, and Gemini Advanced. It is priced at $19.99/month. Meanwhile, the Google AI Ultra subscription plan is priced at $249.99/month, offering higher usage limits and access to Google's top-tier AI models and features. AI Ultra is only available in the US for now.

Flow combines the best of Imagen, Veo, and Gemini. While the AI tool relies on the Veo and Imagen models for generating cinematic visuals, the Gemini integration is meant to make prompting more intuitive so that filmmakers can describe their vision in everyday language.

Google also launched SynthID Detector, a verification platform to help users identify whether a piece of content is AI-generated or not. Users just have to upload a piece of content, and SynthID Detector will identify whether the entire file or just a part of it contains SynthID.

Veo 3, Google's AI video generator, can now generate sound effects, background sounds, and dialogue.

The AI tool is available for Google AI Ultra subscribers in the US via the Gemini app and in Flow. It’s also available for enterprise users on Vertex AI.

Imagen 4 has been upgraded to generate 2K resolution images with clarity in fine details like intricate fabrics, water droplets, and animal fur. The AI-generated images are now available to download in a range of aspect ratios. Imagen 4 is also significantly better at spelling and typography, Google claimed. It is available today in the Gemini app, Whisk, Vertex AI and across Google Workspace.

Gemini is coming to the desktop version of Google Chrome, but only for Google AI Pro and Google AI Ultra subscribers in the US. This enables users to ask Gemini to clarify or summarise information on any webpage they are reading. “In the future, Gemini will be able to work across multiple tabs and navigate websites on your behalf,” Google said.

Starting today, users can get a more customised Deep Research report by uploading their own private PDFs and images. "For instance, a market researcher can now upload internal sales figures (as PDFs) to cross-reference with public market trends, all within Deep Research," Google said.

On Canvas, users can use AI to generate interactive infographics, quizzes and podcast-style Audio Overviews in 45 languages.

The “try on” experimental feature is rolling out in Search Labs in the US today.

With AI Mode, users who search for products will be shown a browsable panel of images and product listings personalised to their taste. The right-hand panel dynamically updates with relevant products and images, helping users pinpoint exactly what they’re looking for and discover new brands.

Google is bringing Project Astra’s live capabilities into Search. With Search Live, users can talk back-and-forth with Search about what they see in real-time, using their phone camera. For instance, if a student wants help with their project, they can tap the Live icon in AI Mode or Lens, point their camera, and ask a question.

AI Mode offers a more dynamic UI with a combination of text, images, and links. It uses a query fan-out technique to break down a user's question into subtopics and issue a multitude of queries simultaneously. This enables Search to dive deeper into the web than a traditional search on Google, helping users discover more of what the web has to offer and find hyper-relevant content that matches their question.

AI Mode will soon be able to make its responses even more helpful with personalised suggestions based on past searches. Users can also opt in to connect other apps, such as Gmail, with AI Mode. For instance, because a user's flight and hotel confirmations are in their inbox, AI Mode can sync its suggestions with when they will actually be in Nashville.

Personal Context in AI Mode is coming this summer. AI Mode will also get Deep Search to help users unpack a topic. It can issue hundreds of searches on a user's behalf to create a research report.

Complex data analysis and visualisations will also be possible via AI Mode. Project Mariner's agentic capabilities are also coming to AI Mode to help users, for instance, find ticket options for sporting events.

AI Overviews is driving an increase of over 10% in usage of Google for the types of queries that show AI Overviews. People are coming to Google to ask more of their questions, including more complex, longer and multimodal questions, says Pichai.

"Deep Think uses our cutting edge research in thinking and reasoning including parallel techniques," says DeepMind's Demis Hassabis. Deep Think is currently being rolled out to Google's trusted testers.

Jules, an AI agent powered by Gemini 2.5 Pro and capable of autonomously reading and generating code, makes its debut at Google I/O 2025. Users can integrate Jules directly into their existing code repositories. The AI coding agent then makes a clone of the user’s codebase within a Google Cloud virtual machine (VM) to ‘understand’ the context of the project and perform tasks such as writing tests, building new features, fixing bugs, etc.

Google is introducing a preview version of audio-visual input and native audio output dialogue for both Gemini 2.5 Flash and Pro. It allows the user to steer the AI-generated tone, accent and style of speaking. For example, you can tell the model to use a stronger voice or whisper when telling a story. It can also switch between languages (say from English to Hindi) mid-speech.

The text-to-speech capability is now available in the Gemini API.

Tulsee Doshi, Senior Director, Product Management at Google DeepMind, is onstage talking about the updated versions of Gemini 2.5 Flash and Pro.

"AI is making an amazing new future possible. Gemini 2.5 Pro is our most intelligent model yet, and we’re truly impressed with what we’ve created. It can build entire apps from sketches. It also leads in learning and ranks number one across multiple benchmarks. I’m thrilled to announce Gemini 2.5 Flash, which comes with enhanced capabilities. Flash will be generally available in early June, and we’re making final adjustments based on your feedback," said Google DeepMind chief Demis Hassabis.

Google launches personalised smart replies in Gmail. They will help users draft emails that match their specific context and tone. The feature will be available in Gmail this summer for subscribers. It pulls from a user's past emails and Google Drive to provide suggestions that are more relevant to the user.

Using Agent Mode, the Gemini app can help users, for instance, find real-estate listings. Gemini uses MCP (Model Context Protocol) to access the listings and can even schedule a tour on your behalf. Agent Mode is coming soon to subscribers.

The new AI feature on Google Meet translates a user's words into their listener’s preferred language in near real time, with low latency.

Google Beam is an AI-first video communication platform that has evolved from Project Starline, which Pichai said brings the world closer to having a “natural freeflowing conversation across languages”. Beam instantly translates spoken languages in “real-time, preserving the quality, nuance, and personality in their voices”.

As expected, Google’s annual conference this time is focused on AI, with the search and advertising giant showcasing a lot of new products and updates. “There's a hard trade off between price and performance. Yet, we'll be able to deliver the best models at the most effective price point... we are in a new phase of AI product shift,” Pichai said in his opening keynote.

"The world is adopting AI faster than ever before. There's one marker of progress. This time last year, we were processing 9.7 trillion tokens a month across our products and APIs. Now we are processing 480 trillion monthly tokens. That's about a 50x increase in just a year," says Pichai.

Adobe seems to have some serious competition coming its way.

The countdown seems to be powered by Google’s image creation tools.

There are a little over 28,000 folks tuned into the livestream right now. Google CEO Sundar Pichai should be taking the stage at any moment now, so get your last-minute predictions in. You can also watch the keynote interpreted in American Sign Language here.

The future of Search is something worth keeping an eye on at this year's Google I/O event, which is being held at a time when the tech giant is staring down the possibility of its search business being broken up by a US district court following a proposal by the US government. On whether AI chatbots could replace search engines, Sundar Pichai had said in his testimony that while it was not a zero-sum battle between the two products, AI “is going to deeply transform Google Search.”

Read the top five moments from Sundar Pichai's testimony in the Google antitrust remedies trial here.

A day ahead of Google I/O 2025, the tech giant announced it is launching its popular AI offering NotebookLM as an app for both Android and iOS devices. Google also said that users will be able to download the acclaimed AI-generated podcasts created using NotebookLM for offline listening. Wondering how to use the app? Follow the steps mentioned here.

On the morning of Day 1 of the Google I/O developer conference, Apple announced that its annual Worldwide Developers Conference (WWDC 2025) will take place from June 9-13 this year. Just yesterday, Microsoft held its own conference (Build 2025), where CEO Satya Nadella announced a series of sweeping updates that reflect the tech giant's vision for an open agentic future.

At 10:30pm IST, Google CEO Sundar Pichai is expected to take the stage to share the new wave of AI products being shipped by the company. Stay tuned.

The gates are open at Shoreline Amphitheatre as attendees start arriving for Google I/O 2025. With an hour to go before Sundar Pichai takes the stage, excitement is already in the air. Expect big updates around AI, Gemini, Android, and more.

Stay tuned for all the action—live from Mountain View.

(Image: Nandagopal Rajan/The Indian Express)

Only a few hours are left before Google commences its much-awaited I/O 2025 event. The tech giant is expected to introduce a slew of AI offerings tonight, including the rumoured ‘Gemini Ultra’ plan for Google One. There are no details available yet about the new plan. Currently, the free plan includes Gemini Pro for basic tasks like writing, multimodal input, summarisation, etc. The premium plan at $19.99/month comes with Gemini Advanced featuring enhanced capabilities, 2TB storage, and integration with Google apps like Gmail and Docs.

The highly anticipated Google I/O event may also see Google detailing Project Mariner, an experimental AI agent showcased last year. The agent has been designed to autonomously navigate and interact with web pages on behalf of users. Functioning as a Chrome browser extension, it can read, click, scroll, and even fill information into forms. The AI agent is reportedly built on Gemini 2.0, giving it advanced multimodal understanding.

Its comprehension abilities allow it to perform complex tasks such as adding items to an online shopping cart and planning travel itineraries, while offering users real-time updates and keeping them in control of the entire process. As of now, Project Mariner is in a testing phase with a select group of users, and the search giant is reportedly focusing on user safety ahead of a wider rollout. With advanced AI capabilities coming to the browsing experience, Project Mariner seems like a significant step toward more intuitive and efficient human-machine interactions.

With Google hyping up Project Astra and Android XR, it looks like we might finally see a demo of Project Moohan, Samsung's first mixed reality headset. While the company's extended reality OS did not get much screen time during last week's The Android Show, Android head Sameer Samat hinted that we may see some sort of prototype smart glasses during this year's Google I/O. With Samsung gearing up to launch its mixed reality headset later this year, this may be Google's best chance to share what its new operating system is capable of.

During The Android Show livestream, Google announced that it has big plans for WearOS. As with Android 16, the Material 3 Expressive visual upgrades will be making their way to WearOS 6, modernising the rather dated-looking user interface.

This means that we will see a mix of more rounded and less rounded UI elements, as well as new animations that make better use of the screen. The new user interface also brings glanceable information, making it easier for users to go through information like messages and calendar appointments.

Google also said that it is "continuing to improve performance and optimize power", which roughly translates to 10 per cent more battery life on Pixel watches. However, the biggest news is that the tech giant is finally bringing Gemini to WearOS-powered devices like the Samsung Galaxy Watch 7 and the Galaxy Watch Ultra.

Google is reportedly working on a new Pinterest-like feature that will show "images designed to give people ideas for fashion or interior design", according to The Information.

However, it is still unclear whether Google will allow users to upload their own images or simply view other people's collections. There is also no word on whether the tech giant plans to integrate the Pinterest-like functionality into an existing Google service or launch it as a standalone website or app. The move may enable Google to offer a more interesting way to get search results and may help the company generate revenue from ads.

Rumour has it that Google One, the company's subscription service, will be getting a bunch of new AI plans. Earlier this month, known tipster TestingCatalog shared a post on X claiming that Google was preparing to introduce two new plans: Gemini Pro and Gemini Ultra.

These subscription plans may be available alongside the existing Gemini Advanced bundle, which offers access to the company's most capable AI models and comes with 2TB of cloud storage. As for pricing, it looks like the upcoming plans will be more expensive than Google's current AI Premium plan.

Google already showed off Android 16 and WearOS 6 at The Android Show last week, meaning the tech giant might mostly talk about new features coming to Gemini alongside some other AI news.

The tech giant is also steadily integrating AI into Search by introducing features like AI Overviews, so it will be interesting to see how much Google's AI feature set has improved in the last year and where it's going next.

Having a deep think... pic.twitter.com/oZPH2jAWyV

— Sundar Pichai (@sundarpichai) May 20, 2025

A few hours before Google I/O starts, Google CEO Sundar Pichai shared a picture of himself and DeepMind head Demis Hassabis standing in the Shoreline Amphitheatre in Mountain View, California.

Ahead of the annual developer conference, Google announced Android 16, the latest version of the world’s most popular mobile operating system. The upcoming version of Android will use “Material 3 Expressive”, a new design language that brings several changes to the user interface.

According to Mindy Brooks, Senior Director, Android Platform, Material 3 Expressive brings “fluid, natural, and springy animations” to the interface. For example, when users dismiss a notification, they will see a smooth detached transition followed by haptic feedback.