Written by Urvish Kothari

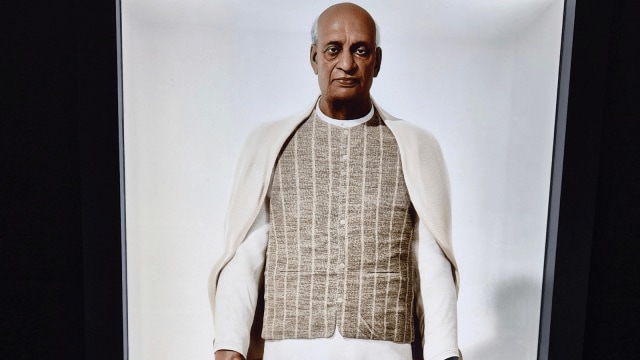

“Have a question for Sardar Patel? Culture Ministry has some answers,” says a report in this newspaper dated September 22. The story is about the AI avatar of Sardar Vallabhbhai Patel, the first Deputy Prime Minister of India, installed at the Prime Ministers’ Museum and Library (PMML). Visitors can put questions to the avatar in the “holobox”, as the system is called, and receive answers in Sardar Patel’s voice. Both the answers and the voice are generated by AI.

This should instantly sound an alarm for anyone concerned with the authenticity and nuances of history. The dangers of using AI for complex historical narratives outweigh the instant engagement and gratification it offers.

There is certainly a case for using AI avatars in museums, and some museums around the world already use them alongside other modern technologies. But such avatars generally engage in non-controversial conversations of limited scope. They are usually designed so that distortions, whether caused by the technology itself or by the host institution, do not arise. Sardar Patel’s life and times involve many contentious issues that have already been distorted for political advantage. Against this background, there is a real concern that Patel’s AI avatar could be manipulated to present inconvenient facts in a form that suits the current dispensation.

Take, for instance, Patel’s ban on the Rashtriya Swayamsevak Sangh (RSS) after Gandhi’s assassination. According to the report, this is what the AI avatar had to say on the subject: “I suggested imposing a ban on the RSS in the aftermath of Gandhi’s assassination with a clear intention to investigate the truth. After a thorough investigation, I concluded that RSS as an organisation had no part in the conspiracy to kill Mahatma Gandhi… After imposing the ban, I engaged with the RSS leadership, including ‘Guruji’ M S Golwalkar, and sought assurances that the organisation would work within the framework of the Constitution.”

This is a virtual clean chit to the RSS, attributed to Sardar Patel in words he never spoke or wrote in that form. He never addressed Golwalkar as “Guruji”. The response also omits that the lifting of the ban was conditional and that the RSS did not fulfil some of those conditions.

There are many serious concerns about creating an AI conversation model of a historical personality, irrespective of which government or party wants to install it.

Training Bias

Once trained, the AI model works without human intervention. The real catch lies in the training. For example, Patel calling Golwalkar “Guruji” might point to a positive bias towards Golwalkar in the material used to train the model.

Similarly, if heavily biased historical records or extremist material are used to train the model, the AI avatar might perpetuate those biases or overlook certain viewpoints, especially marginalised ones.

Misinterpretation and Hallucination

Even if AI models are trained on accurate historical information, the nuances of a historical figure’s life, work, thoughts, speeches and actions are often interpreted through various lenses. An AI avatar may oversimplify or misinterpret them. AI does not reproduce answers verbatim from its data, especially for nuanced or interpretative questions. Current AI models are notorious for “hallucinating”: fabricating details, inventing data and even sources outright, and presenting them as established fact. All of this can distort history.

Flattened Narrative

When asked about his relationship with Gandhi, the AI avatar of Sardar Patel gave an answer that was not incorrect but lacked nuance. (It did not mention that he entered the freedom movement because of Gandhi.) Such an avatar is prone to reducing complex issues and relationships to simplistic, one-dimensional answers.

Lack of Authenticity

Even if the AI avatar spoke exactly the words of the original text, its tone, stress and many other nuances would in all likelihood be misrepresented. Suppose someone made an AI avatar of Gandhi, recreating his voice only to read out his autobiography in his own words. It would still not do justice to Gandhi, because Gandhi himself might have read the same text differently in different situations. If this is the case with authentic text, imagine how disastrous it could be with “What if?” questions.

Copyright Issues

The fundamental question about AI avatars of historical figures is: who owns the right to represent them digitally? Who may make digital avatars of such figures? AI avatars can be trained on copyrighted material under a fair-use argument, especially by governments that may have no interest in monetising them. However, any misrepresentation by the AI avatar could be challenged by those who hold the copyright in the text or by the custodians of the moral rights of the deceased historical figure. Unfortunately, there are no specific legal guidelines on this.

The writer is the author of A Plain Blunt Man: The Essential Sardar Vallabhbhai Patel